Quick start to Quantum Computing with CUDA-Q

Lab 4 - Converge on a Solution: Write your first hybrid variational program

Labs 3 and 4 break the hybrid variational algorithm into four steps. In the first notebook (lab 3), we cover sections 1 and 2. The second notebook (lab 4) covers the remaining sections.

Section 1: Comparing Classical Random Walks and Discrete Time Quantum Walk (DTQW)

Section 2: Programming a variational DTQW with CUDA-Q

Section 3: Defining Hamiltonians and Computing Expectation Values

Section 4: Identify Parameters to Generate a Targeted Mean Value

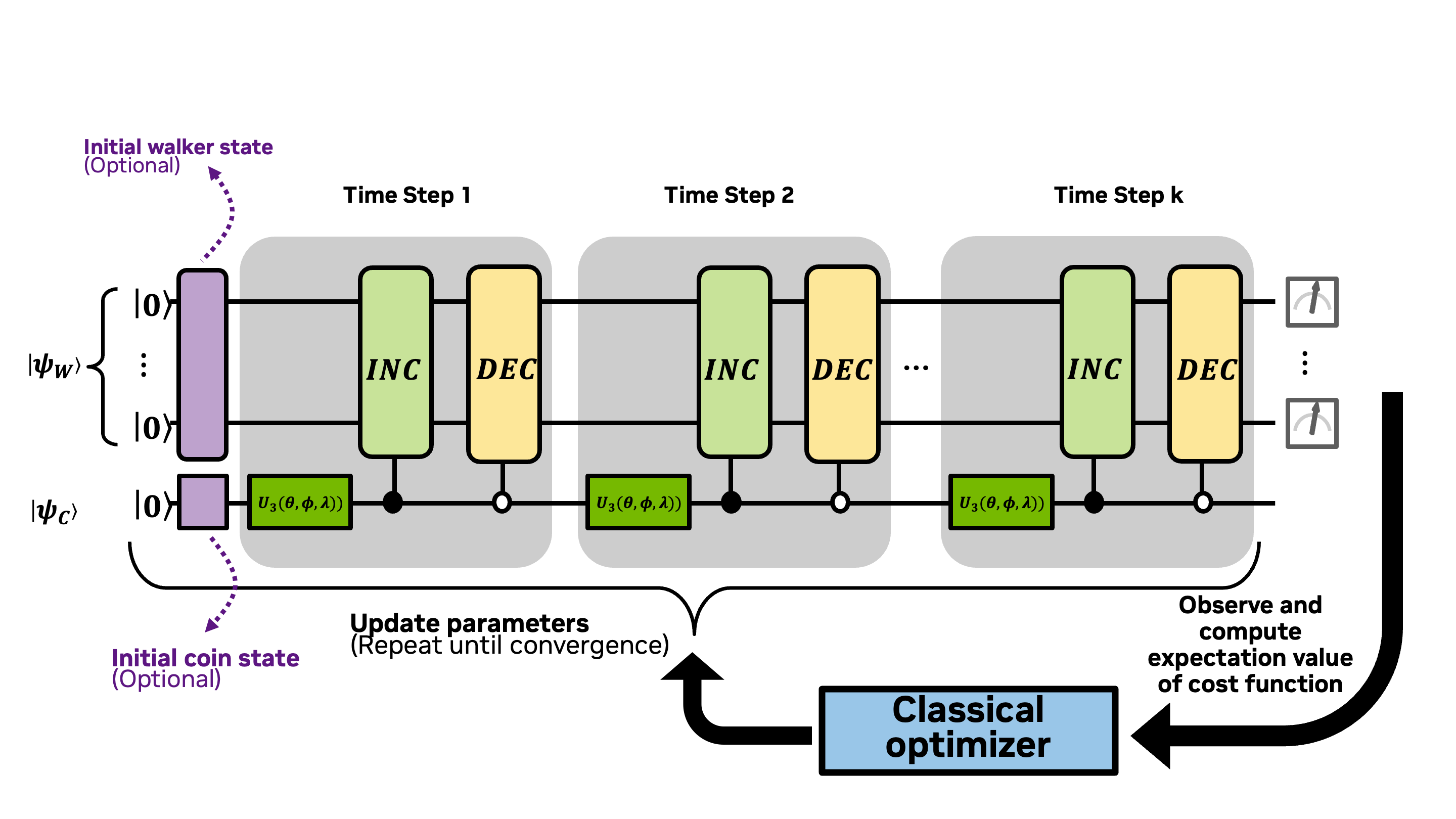

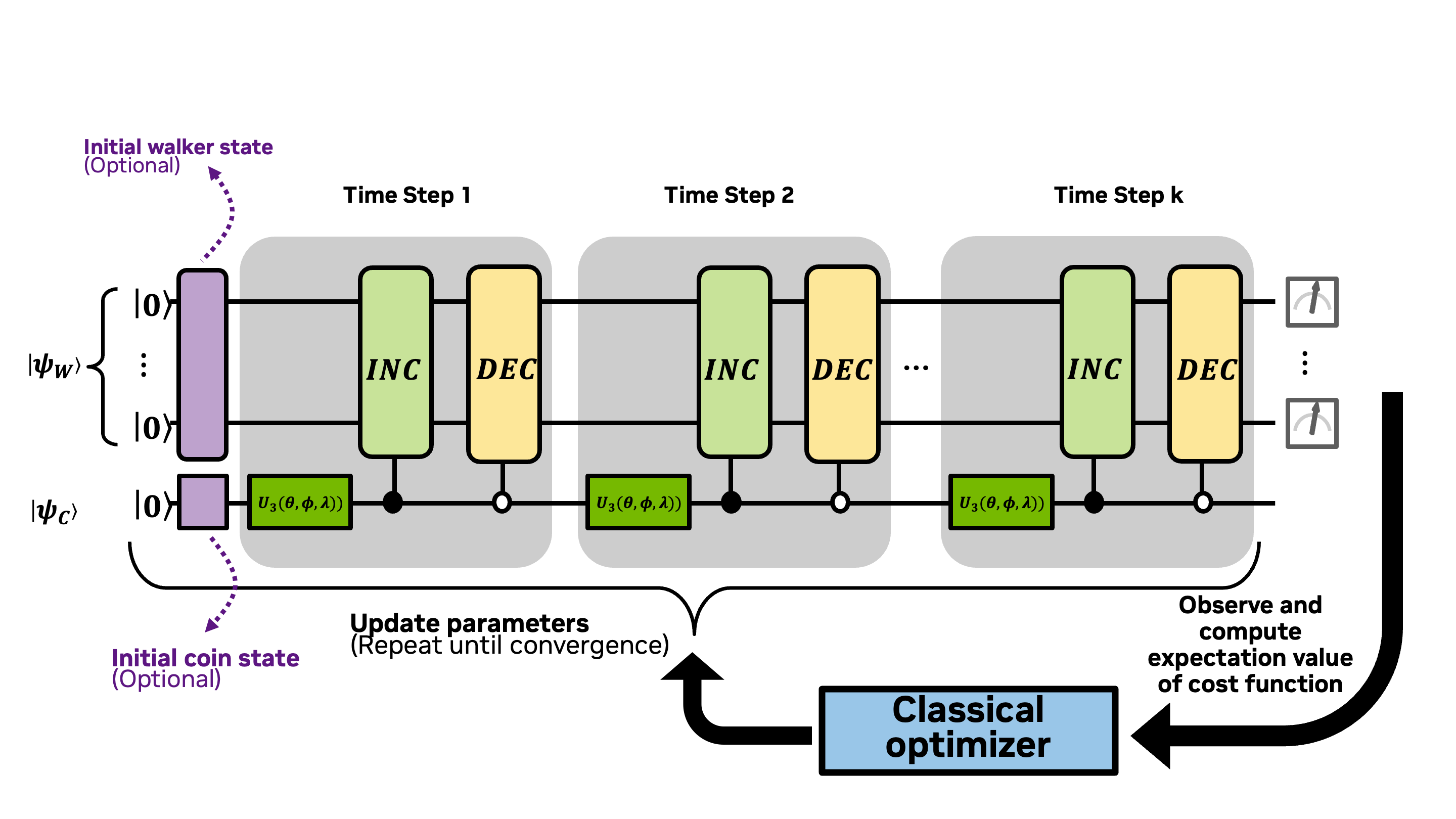

In Lab 3, we coded the parameterized kernel for the discrete time quantum walk (DTQW) using cudaq. In this notebook, we'll compute expectation values and use a classical optimizer to identify parameters to optimize a cost function, completing the coding of the diagram below:

What you'll do:

Define and visualize Discrete Time Quantum Walks for generating probability distributions

Add variational gates for the coin operators to create your first variational program

Construct a Hamiltonian that can be used to compute the average of the probability distribution generated by a quantum walk

Use a classical optimizer to identify optimal parameters in the variational quantum walk that will generate a distribution with a targeted average value

Terminology you'll use:

Computational basis states (basis states for short)

Probability amplitude

Statevector

Control and target for multi-qubit controlled-operations

Hamiltonian

Pauli-Z operator

CUDA-Q syntax you'll use:

quantum kernel function decoration: @cudaq.kernel

qubit initialization: cudaq.qvector and cudaq.qubit

quantum gates: x, h, t, _.ctrl, cudaq.register_operation, u3

spin operators: spin.z, spin.i

extract information from a kernel: sample, get_state, _.amplitude, observe

optimize parameters in a variational quantum algorithm: cudaq.optimizers

🎥 You can watch a recording of the presentation of a version of this notebook from a GTC DC tutorial in October 2025.

Let's begin by installing the necessary packages.

We've copied over the functions from Lab 3.1 that we'll need here. Make sure you execute this cell below before continuing.

Section 3: Defining Hamiltonians and Computing Expectation Values

(Sections 1 and 2 can be found in Lab 3)

A significant challenge in quantum computing is the efficient encoding of classical data into quantum states that can be processed by quantum hardware or simulated on classical computers. This is particularly crucial for many financial applications, where the first step of a quantum algorithm often involves efficiently loading a probability distribution. For instance, to enable lenders to price loans more accurately based on each borrower's unique risk profile, it is essential to estimate individual loan risk distributions (such as default and prepayment) while accounting for uncertainties in modeling and macroeconomic factors. Efficiently implementing this process on a quantum computer requires the ability to load a log-normal or other complex distribution into a quantum state (Breeden and Leonova).

Financial markets exhibit complex probability distributions and multi-agent interactions that classical models struggle to capture efficiently. Quantum walks can be used to generate probability distributions of market data. The quantum walk approach offers:

Flexible modeling of price movements

Better representation of extreme events compared to classical methods

Ability to capture asymmetric return distributions (Backer et al).

The Problem

This tutorial explores how to load a probability distribution with a given property (in this case, a fixed mean) into a quantum state. By following this example, you will gain the essential knowledge needed to comprehend more sophisticated algorithms, such as the multi-split-step quantum walks (mSSQW) method, as described by Chang et al. The mSSQW technique can be employed to load a log-normal distribution, which is useful for modeling the spot price of a financial asset at maturity.

Pedagogical Remark: We chose to examine the coarser problem of generating a distribution with a targeted mean, rather than generating a targeted distribution itself (as in Chang et al.), because working with the mean lets us introduce the expectation value of a Hamiltonian. This concept is central to many variational algorithm applications in chemistry, optimization, and machine learning.

Let's begin by examining the final step in the quantum program template, which involves taking measurements and interpreting the results:

Up to this point, for this step of the quantum program, we used cudaq.get_state to read out the statevector of the quantum kernel and cudaq.sample to sample its measurement outcomes. However, reading out the full statevector is not always practical, because its dimension scales exponentially with the number of qubits. For example, describing the statevector for $n$ qubits requires storing $2^n$ amplitudes, which quickly becomes extremely memory-intensive. In many quantum algorithms, we do not need a complete description of the statevector; instead, partial information like an "average" value often suffices. This is where the concept of expectation value comes in.
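To make the exponential scaling concrete, here is a minimal sketch that counts the memory needed to hold every amplitude of a statevector. The helper name `statevector_bytes` and the choice of 16 bytes per amplitude (one double-precision complex number) are assumptions made for illustration; a simulator may use a different precision.

```python
def statevector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2^n amplitudes of an n-qubit statevector.

    bytes_per_amplitude=16 assumes double-precision complex numbers.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: 2^{n} amplitudes, about {gib:.3g} GiB")
```

Each additional qubit doubles the storage, which is why an "average" quantity that can be estimated without the full statevector is so useful.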

Illustrative Example of Expectation Value

Before we delve into our DTQW example, let's make this idea of "average" value of a quantum state more explicit by considering a specific example.

Take the quantum state $|\psi\rangle = \sum_{i=0}^{15} \alpha_i |i\rangle$, where $\alpha_i = \frac{1}{4}$ for all $i$. The probability amplitudes for the computational basis states of this example are graphed below:

Expectation Values

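We can think of this as a probability distribution of a random variable and compute the expectation value (i.e., average or mean). Graphically, we can determine that the expectation value is the position halfway between $7$ and $8$ (i.e., between the states $|7\rangle$ and $|8\rangle$). Analytically, we'd find this by computing $\sum_{i=0}^{15} i\,p(i)$, where $p(i)$ is the probability of measuring the state $|i\rangle$. In this example each of the 16 basis states is measured with probability $\frac{1}{16}$, so we'd get $$\sum_{i=0}^{15} i \cdot \frac{1}{16} = \frac{120}{16} = 7.5.$$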
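The same calculation can be sketched in a few lines of plain Python. This is only an illustration of the arithmetic, not CUDA-Q code; the function name `mean_position` is hypothetical, and the state is represented as a plain list of amplitudes indexed by the integer label of each basis state.

```python
def mean_position(amplitudes):
    """Mean of the distribution p(i) = |alpha_i|^2 over basis-state labels i."""
    probabilities = [abs(a) ** 2 for a in amplitudes]
    total = sum(probabilities)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("amplitudes must describe a normalized state")
    return sum(i * p for i, p in enumerate(probabilities))


# Equal superposition over 16 basis states: every amplitude is 1/4.
equal_superposition = [0.25] * 16
print(mean_position(equal_superposition))  # 7.5
```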

Let's look into how, in general, we can deduce the expectation value from a state $|\phi\rangle = \sum_i \alpha_i |i\rangle$. We know that $p(i) = |\alpha_i|^2$, by definition of the probability amplitudes. Therefore, to complete the computation, we need an operation to translate from the state $|i\rangle$ to the integer $i$.

This is where the Hamiltonian comes in. We won't formally define a Hamiltonian in its full generality here (you can read more about Hamiltonians in Scott Aaronson's lecture notes). For this tutorial, we will consider a Hamiltonian to be a $2^n \times 2^n$ matrix $H$ that operates on an $n$-qubit quantum state through matrix multiplication. This matrix has certain additional properties, which we will not focus on for now. For the purposes of this tutorial, all you need to know is that $H$ has the properties:

$H|i\rangle = i|i\rangle$ for $i \in \{0, 1, \dots, 2^n - 1\}$

$H$ is linear, that is $H(a|\psi\rangle + b|\phi\rangle) = aH|\psi\rangle + bH|\phi\rangle$ for $a, b \in \mathbb{C}$ and states $|\psi\rangle$ and $|\phi\rangle$.

We've left the explicit formula for $H$ in the optional box below. But assuming that we have such an operation, we can define the expectation value of this Hamiltonian with respect to a state $|\phi\rangle$ and denote it as $$\langle\phi|H|\phi\rangle,$$ where the notation $\langle\phi|$ is read as "bra phi" and comes from the bra-ket notation. It represents the conjugate transpose of the ket, $|\phi\rangle$. In other words, bra-$\phi$ ($\langle\phi|$) is equal to $|\phi\rangle^\dagger$. Placing $\langle\phi|$ next to $H|\phi\rangle$ in the equation above represents the action of taking the dot product of $\langle\phi|$ with $H|\phi\rangle$.

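Let's compute $\langle\psi|H|\psi\rangle$ for the equal superposition state $|\psi\rangle = \sum_{i=0}^{15} \frac{1}{4}|i\rangle$ graphed above. By our choice of $H$ and its linearity, we get that $$H|\psi\rangle = \sum_{i=0}^{15} \frac{1}{4} H|i\rangle = \sum_{i=0}^{15} \frac{i}{4}|i\rangle.$$

Next, combining this with $\langle\psi|$ via a dot product, we get $$\langle\psi|H|\psi\rangle = \left(\sum_{j=0}^{15} \frac{1}{4}\langle j|\right)\left(\sum_{i=0}^{15} \frac{i}{4}|i\rangle\right) = \sum_{i=0}^{15} \frac{i}{16} = 7.5,$$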

which is exactly the expectation value that we found analytically at the beginning of this section.
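As a sanity check on the bra-ket calculation, the sketch below applies a Hamiltonian with the property $H|i\rangle = i|i\rangle$ to a list of amplitudes and then takes the inner product with the original state. It is plain Python rather than CUDA-Q, and the helper names `apply_position_hamiltonian` and `inner_product` are hypothetical.

```python
def apply_position_hamiltonian(state):
    """Return H|state> for the diagonal H defined by H|i> = i|i>."""
    return [i * amplitude for i, amplitude in enumerate(state)]


def inner_product(bra_of, ket):
    """<a|b>: conjugate the entries of the first vector, then take the dot product."""
    return sum(complex(a).conjugate() * b for a, b in zip(bra_of, ket))


psi = [0.25] * 16                      # equal superposition on 16 basis states
h_psi = apply_position_hamiltonian(psi)
expectation = inner_product(psi, h_psi).real
print(expectation)  # 7.5
```

Because the conjugate is taken on the bra side, the result is unchanged if the amplitudes carry complex phases; only their magnitudes enter the mean.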

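Optional: The particular Hamiltonian that we need is the one that has the property $H|i\rangle = i|i\rangle$. In other words, the computational basis states are eigenvectors of the Hamiltonian and the eigenvalue of $|i\rangle$ is $i$. The Hamiltonian that has this property is $$H = \sum_{i=0}^{2^n-1} i\,|i\rangle\langle i|,$$ where $|i\rangle\langle i|$ is the matrix product of $|i\rangle$ with $\langle i|$. A more useful formulation of $H$ is $$H = \sum_{k=0}^{n-1} 2^{n-1-k}\,\frac{I - Z_k}{2},$$ where $I$ is the identity matrix and $Z_k$ is the $2^n$-dimensional matrix which applies the Pauli-Z operator to the $k^{\text{th}}$ qubit and holds all other qubits constant (here qubit $0$ is taken to be the leftmost, most significant bit of $i$). For example, applying $Z_0$ to the state $|10\rangle$ results in $-|10\rangle$, and $Z_1$ applied to $|10\rangle$ is $|10\rangle$. With some matrix algebra, you can verify that the following equality holds for $i \in \{0, 1, \dots, 2^n - 1\}$: $$\sum_{k=0}^{n-1} 2^{n-1-k}\,\frac{I - Z_k}{2}\,|i\rangle = i\,|i\rangle.$$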
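The Pauli-Z formulation can be checked numerically without building any matrices, since every $Z_k$ is diagonal in the computational basis. The sketch below assumes the decomposition $H = \sum_{k=0}^{n-1} 2^{n-1-k}(I - Z_k)/2$ with qubit $0$ as the leftmost (most significant) bit; that ordering is an assumption of this example, and the function names are hypothetical.

```python
def z_diagonal_entry(basis_index, qubit, num_qubits):
    """Eigenvalue of Z_qubit on |basis_index>, with qubit 0 as the leftmost bit."""
    bit = (basis_index >> (num_qubits - 1 - qubit)) & 1
    return 1 - 2 * bit  # +1 if the bit is 0, -1 if the bit is 1


def position_eigenvalue(basis_index, num_qubits):
    """Evaluate sum_k 2^(n-1-k) * (1 - z_k) / 2 on the given basis state."""
    return sum(
        2 ** (num_qubits - 1 - k)
        * (1 - z_diagonal_entry(basis_index, k, num_qubits)) / 2
        for k in range(num_qubits)
    )


n = 4
# Every basis state |i> should be mapped to the eigenvalue i.
print(all(position_eigenvalue(i, n) == i for i in range(2 ** n)))
```

The key observation is that $(I - Z_k)/2$ returns the value of bit $k$, so the weighted sum simply reassembles the integer $i$ from its binary digits.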

Computing Expectation Values with observe

The observe function is used to compute expectation values of Hamiltonians. Suppose that the state $|\phi\rangle$ is defined by a cudaq.kernel called my_kernel, and $H$ is a Hamiltonian stored as a cudaq.operator, named my_hamiltonian. We can compute the expectation value $\langle\phi|H|\phi\rangle$ using observe(my_kernel, my_hamiltonian).expectation(). For observe to work, the kernel shouldn't contain any measurements of the state of interest; otherwise, the state of the kernel would have collapsed to one of the basis states. We can store $H$ as a cudaq.operator using cudaq.spin.z and cudaq.spin.i along with + and scalar multiplication *. Let's walk through computing the expectation value for a probability distribution represented by a quantum state in the code block below.

As anticipated, the expectation value of the equal superposition state is computed to be 7.5. In this section we introduced ideas that are essential for many other applications of variational quantum algorithms. In particular, we introduced the Hamiltonian for computing the average value of a quantum state if we identify the computational basis states with integers. We also demonstrated how to compute the expectation value of the Hamiltonian applied to a quantum state using the observe command. In the next section, we'll apply all of this to our DTQW problem.

Expectation value of a DTQW

We are now prepared to apply what we've learned to the problem of finding a Discrete-Time Quantum Walk (DTQW) that yields a probability distribution with a specific mean. Let's aim to generate a distribution from a quantum walk with a mean of $3$. In the code block below, you'll find a parameterized kernel for the DTQW based on the code that we developed in Lab 3. We've just added a flag so that we can both sample and compute expectation values with the kernel.

Do your best to adjust the parameter values to achieve a state with an average close to $3$. It's challenging, isn't it? There are just so many combinations of parameter values to experiment with!

Section 4: Identify Parameters to Generate a Targeted Mean Value

We have now arrived at the need for the variational quantum algorithm (see the diagram below). We can initialize the kernel with some parameter values, and then turn the problem over to a classical optimizer to search for new parameter values that minimize a cost function (sometimes referred to as an error function). In our case we want to minimize the difference between the expectation value $\langle\phi|H|\phi\rangle$ and our targeted mean of $3$. For the cost function, we'll use the squared error $(\langle\phi|H|\phi\rangle - 3)^2$, which is the mean square error (MSE) for a single target value, as our metric for closeness between the expectation value and the targeted mean.

The code block below defines the cost function and creates a list to record its values at each iteration of the loop.
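The shape of such a cost function can be sketched in plain Python as follows. To keep the example self-contained, the expectation value is supplied by `toy_expectation`, a hypothetical stand-in for the value that observe would return for the DTQW kernel; it models four qubits that each receive the same ry rotation, which is an assumption of this sketch and not the DTQW from Lab 3.

```python
import math

TARGET_MEAN = 3.0
cost_values = []  # one entry per cost evaluation, for plotting convergence later


def toy_expectation(parameters):
    """Hypothetical stand-in for the expectation value returned by observe.

    Models four qubits that each receive the same ry(theta) rotation, so
    every bit is 1 with probability (1 - cos(theta)) / 2 and the mean
    position is 15 * (1 - cos(theta)) / 2.
    """
    theta = parameters[0]
    return 7.5 * (1.0 - math.cos(theta))


def cost(parameters):
    """Squared error between the expectation value and the targeted mean."""
    error = toy_expectation(parameters) - TARGET_MEAN
    value = error ** 2
    cost_values.append(value)  # record the value for this evaluation
    return value


print(cost([math.pi / 2]))  # equal superposition, mean 7.5, far from the target
```

Recording each value inside the cost function means the history is captured no matter which optimizer ends up calling it.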

Below we use CUDA-Q's built-in optimization suite (cudaq.optimizers) to minimize the cost function. Specifically, we will select the gradient-free Nelder-Mead classical optimization algorithm.
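To give a feel for what a gradient-free simplex search does, here is a simplified Nelder-Mead sketch in plain Python. It is not the cudaq.optimizers implementation: the function `nelder_mead`, its parameters, and the `toy_cost` it minimizes are all assumptions made for illustration, and the variant below uses only reflection, expansion, inside contraction, and shrink steps.

```python
import math


def toy_cost(parameters):
    """Hypothetical stand-in cost: squared distance of a toy mean from 3."""
    mean = 7.5 * (1.0 - math.cos(parameters[0]))
    return (mean - 3.0) ** 2


def nelder_mead(f, x0, step=0.5, max_iter=200, tol=1e-8):
    """Minimize f from x0 with a simplified Nelder-Mead simplex search."""
    n = len(x0)
    # Initial simplex: x0 plus one point offset along each coordinate axis.
    simplex = [list(x0)]
    for k in range(n):
        point = list(x0)
        point[k] += step
        simplex.append(point)
    values = [f(p) for p in simplex]

    for _ in range(max_iter):
        # Order vertices from best (lowest cost) to worst.
        order = sorted(range(n + 1), key=lambda j: values[j])
        simplex = [simplex[j] for j in order]
        values = [values[j] for j in order]
        # Stop once the simplex has collapsed around the best vertex.
        size = max(abs(v[k] - simplex[0][k]) for v in simplex[1:] for k in range(n))
        if size < tol:
            break

        # Centroid of all vertices except the worst.
        centroid = [sum(p[k] for p in simplex[:-1]) / n for k in range(n)]
        worst = simplex[-1]

        def along(t):
            # Point on the line through the centroid, away from the worst vertex.
            return [c + t * (c - w) for c, w in zip(centroid, worst)]

        reflected = along(1.0)
        f_r = f(reflected)
        if f_r < values[0]:
            expanded = along(2.0)
            f_e = f(expanded)
            if f_e < f_r:
                simplex[-1], values[-1] = expanded, f_e
            else:
                simplex[-1], values[-1] = reflected, f_r
        elif f_r < values[-2]:
            simplex[-1], values[-1] = reflected, f_r
        else:
            contracted = along(-0.5)
            f_c = f(contracted)
            if f_c < values[-1]:
                simplex[-1], values[-1] = contracted, f_c
            else:
                # Shrink every vertex halfway toward the best one.
                best = simplex[0]
                simplex = [best] + [
                    [b + 0.5 * (x - b) for b, x in zip(best, vertex)]
                    for vertex in simplex[1:]
                ]
                values = [values[0]] + [f(vertex) for vertex in simplex[1:]]

    best_index = min(range(n + 1), key=lambda j: values[j])
    return simplex[best_index], values[best_index]


best_parameters, best_cost = nelder_mead(toy_cost, [0.0])
print(best_parameters, best_cost)
```

The optimizer only ever calls the cost function, never its derivative, which is why this family of methods pairs naturally with expectation values estimated from a quantum kernel.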

Let's plot the cost values for each iteration of the variational algorithm to see the convergence.

Finally, let's use the optimal parameters to carry out the DTQW to verify that we have generated a probability distribution with a mean close to 3.

Completion of the Quick Start to Quantum Computing Series

Congratulations! You've successfully written your first variational quantum algorithm. You're now prepared to explore more advanced examples and applications.

Additional Challenges and Exercises

To further develop your skills, consider the following problems:

1. Parameterized DTQW: Modify the existing DTQW code to incorporate different parameters in the coin flip operation u3 at each step. In other words each step of the DTQW might have a different coin operator. You can build upon the code developed in Lab 3 and 4.

2. Targeted Distribution Generation: Adapt the variational algorithm to generate a targeted distribution. To do this, you will need to sample the distribution at each stage and use the mean square error between the sampled distribution and the targeted distribution as your cost function. Hint: For this problem, you will use the sample command instead of the observe command and you will not need to define a Hamiltonian.

3. Varying the quantum walk: Consider variations to the DTQW by introducing an additional coin flip in between steps right and left (i.e., between the controlled-INC and controlled-DEC operations).
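As a starting point for the targeted distribution exercise, the sketch below computes the mean square error between sampled counts and a target distribution. The function name `distribution_mse`, the plain dictionary of bitstring counts, and the convention that the leftmost bit is the most significant are all assumptions of this example; adapt them to whatever your sampling step actually returns.

```python
def distribution_mse(counts, target_probabilities):
    """Mean square error between sampled frequencies and a target distribution.

    counts maps bitstrings (leftmost bit most significant, an assumption)
    to the number of times each outcome was sampled.
    """
    shots = sum(counts.values())
    num_states = len(target_probabilities)
    sampled = [0.0] * num_states
    for bitstring, count in counts.items():
        sampled[int(bitstring, 2)] = count / shots
    squared_errors = [(s - t) ** 2 for s, t in zip(sampled, target_probabilities)]
    return sum(squared_errors) / num_states


# Hypothetical counts from 100 shots on two qubits.
example_counts = {"00": 50, "11": 50}
print(distribution_mse(example_counts, [0.5, 0.0, 0.0, 0.5]))  # 0.0
```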

The animation below demonstrates what is possible by solving problems 1-3 above to model financial data:

Future Directions and Resources

Now that you have a solid foundation in variational quantum algorithms, you can explore more advanced topics and applications, including:

Multi-split-step quantum walk for financial data: Apply the variational quantum algorithm template to generate probability distributions for financial data. You can learn more about this example in notebooks 1 and 2 of the CUDA-Q Academic Quantum Applications to Finance series.

Quantum Approximate Optimization Algorithm (QAOA): Learn about QAOA, circuit cutting, and GPU acceleration as applied to the max cut optimization problem.

Variational Quantum Eigensolver (VQE): Discover how VQE can be used to find the ground state of a quantum system.

Hybrid quantum neural networks: Explore the intersection of quantum computing and machine learning.

To take your quantum computing skills to the next level, consider learning about accelerating quantum computing and expanding simulation capabilities. The Accelerating Quantum Computing: A Step-by-Step Guide provides a comprehensive resource to help you achieve this goal.