
DSCI 100 - Introduction to Data Science

Lecture 2 - Getting data into R

2019-09-12

Housekeeping

Pinned Piazza post about viewing fresh notebooks & how to upload to JupyterHub

Please fill out our pre-course survey

password: dsci100

your responses are anonymous to the instructors

will not affect your course grade

the survey closes next Thursday, September 19

Most/all folks who were registered before the due date have been graded for worksheet_01

Late registrant assignments are still being graded

You will get feedback forms for each assignment telling you which questions were correct/incorrect. They are coming soon!

Recap of last week

Introduction to R programming and Jupyter notebooks

a sprinkle of data analysis

UBC's sketchy wifi

Today

Taking our first step in data analysis: loading data into R!

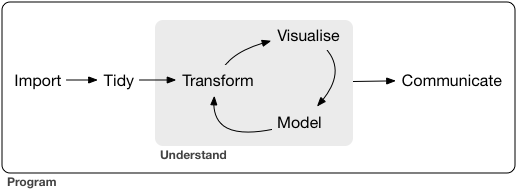

In the data science workflow (source: Grolemund & Wickham, R for Data Science)

Loading/importing data

The 4 most common ways to do this in data science:

read in a text file with data in a spreadsheet format

read from a database (e.g., SQLite, PostgreSQL)

scrape data from the web (optional bonus material)

use a web API to read data from a website (not covered in DSCI100)
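The database route can be sketched in R as follows. This is a minimal sketch under assumptions: it needs the DBI, RSQLite, dplyr and dbplyr packages, and it uses a throwaway in-memory SQLite database filled with R's built-in faithful data frame instead of a real database file.

```r
library(DBI)
library(dplyr)

# Assumption: an in-memory SQLite database stands in for a real .db file
con <- dbConnect(RSQLite::SQLite(), ":memory:")

# Copy R's built-in `faithful` data frame into it so there is a table to read
dbWriteTable(con, "faithful", faithful)

# tbl() gives a lazy reference to the table (no rows are pulled yet);
# collect() runs the query and brings the rows into R as a tibble
faithful_db <- tbl(con, "faithful")
faithful_local <- collect(faithful_db)

head(faithful_local)

# Close the connection once the data is in R
dbDisconnect(con)
```

The tbl() step is what the workflow slide refers to: it lets you work with a database table without loading all of it first.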

Different ways to locate a file / dataset

Local (on your computer)

An absolute path locates a file with respect to the "root" folder on a computer

starts with /, e.g. /home/trevor/documents/timesheet.xlsx

A relative path locates a file relative to your working directory

doesn't start with /, e.g. documents/timesheet.xlsx

(working directory is /home/trevor/)

Remote (on the web)

via a URL that starts with http:// or https://, e.g.

http://traffic.libsyn.com/mbmbam/MyBrotherMyBrotherandMe367.mp3
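A small sketch of the difference in R, assuming the readr package is installed. The folder and file names (a trevor folder inside R's temporary directory, a timesheet.csv file) are made up for illustration, and a CSV stands in for the .xlsx file above so that read_csv can open it. read_csv also accepts a URL starting with http:// or https:// in place of a file path.

```r
library(readr)

# Hypothetical folder standing in for /home/trevor/
home <- file.path(tempdir(), "trevor")
dir.create(file.path(home, "documents"), recursive = TRUE, showWarnings = FALSE)
writeLines(c("day,hours", "Mon,8"), file.path(home, "documents", "timesheet.csv"))

# Make that folder the working directory (setwd returns the previous one)
old_wd <- setwd(home)

# Relative path: interpreted starting from the working directory
relative_path <- "documents/timesheet.csv"
# Absolute path: the full location starting from the root folder
absolute_path <- normalizePath(relative_path)
print(absolute_path)

# Both paths point at the same file, so both reads give the same data
timesheet_rel <- read_csv(relative_path)
timesheet_abs <- read_csv(absolute_path)

# Restore the original working directory
setwd(old_wd)
```

After the working directory changes back, relative_path no longer finds the file, while absolute_path still does. That is the practical trade-off between the two.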

Demo: Loading data from your computer

Workflow:

make the dataset accessible to the computer

might need to load a package, download a file, connect to a database

inspect the data using Jupyter to see what it looks like

load the data into R

using read_csv, read_delim, tbl, etc.

inspect the result to make sure it worked

the head function is useful here

Let's load the Old Faithful geyser dataset from Larry Wasserman's book All of Statistics
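The four workflow steps can be sketched with readr. This is a minimal sketch, assuming the readr package is installed. Since the file from Wasserman's book is not reproduced here, it writes R's built-in faithful data frame (eruption durations and waiting times for Old Faithful) to a temporary CSV and loads that instead; the book's file may be formatted differently.

```r
library(readr)

# Step 1 (make the data accessible): write the built-in `faithful` data to a
# temporary CSV so there is a file to practice on
faithful_path <- file.path(tempdir(), "old_faithful.csv")
write_csv(faithful, faithful_path)

# Step 2 (inspect the raw file): peek at the first few lines as plain text
read_lines(faithful_path, n_max = 3)

# Step 3 (load into R): read the CSV into a data frame (tibble)
old_faithful <- read_csv(faithful_path)

# Step 4 (inspect the result): first 6 rows, then size and column names
head(old_faithful)
dim(old_faithful)
colnames(old_faithful)
```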

Note about loading data

It's important to do it carefully + check results after!

will help reduce bugs and speed up your analyses down the road

Think of it as tying your shoes before you run; not exciting, but if done wrong it will trip you up later!
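One way to check results after loading is to tell readr which column types you expect and then look for values it could not parse. A minimal sketch, assuming readr is installed, using a tiny made-up file that contains a typo:

```r
library(readr)

# A tiny hypothetical file in which one `waiting` value is not a number
bad_path <- file.path(tempdir(), "faithful_with_typo.csv")
writeLines(c("eruptions,waiting", "3.6,79", "1.8,oops", "3.333,74"), bad_path)

# Spell out the column types we expect instead of letting readr guess them
faithful_bad <- read_csv(
  bad_path,
  col_types = cols(eruptions = col_double(), waiting = col_double())
)

# The unparseable value becomes NA (readr also warns), so count missing values...
n_missing <- sum(is.na(faithful_bad$waiting))
# ...and ask readr which cells it could not parse (row, column, expected, actual)
issues <- problems(faithful_bad)
issues
```

Without the check, the NA would quietly flow into later summaries and plots.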

Questions?

Go for it!

Class activity:

With the group at your table, try to read in this dataset from the web:

https://archive.ics.uci.edu/ml/machine-learning-databases/00236/seeds_dataset.txt

What did we learn?

What did we learn, Winter 2019

read_table2 allows us to read files whose columns are separated by any amount of whitespace

read the terms of service before scraping

when to use the different forms of read_delim (or read_*)
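A small sketch of read_table2 on a made-up whitespace-delimited file, assuming readr is installed. The numbers and the column names x, y, z are invented for illustration and are not taken from the seeds data. In readr 2.0 and later, read_table does the same job and read_table2 is deprecated, so it may print a deprecation warning.

```r
library(readr)

# Made-up file: columns separated by varying runs of spaces and tabs
ws_path <- file.path(tempdir(), "whitespace_example.txt")
writeLines(c("1.5   2.25\t3",
             "4.5\t\t5.75  6"), ws_path)

# read_table2() treats any run of whitespace as one column separator.
# The column names are hypothetical because the file has no header row.
ws_data <- read_table2(ws_path, col_names = c("x", "y", "z"))
ws_data
```

With read_delim and a single-character delimiter such as " ", each extra space would instead start a new (empty) field, which is why the whitespace-aware reader is the better fit here.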

Note on web scraping

More and more websites don't want you scraping

Instead, they provide "easier" ways for you to access the data, which lets them regulate access and know who you are

So, TL;DR: read the Terms of Service for ANY webpage you plan to scrape

they're long, so search for "scraping", "auto", "bot", etc. to find the relevant section