DSCI 100 - Introduction to Data Science

Lecture 6 - Classification, an introduction using k-nearest neighbours

2019-02-07

First, a little housekeeping

Quiz grading will be finished Monday.

Feedback forms will now be returned to you on the server where you do your homework. At some point today you will see a `feedback` folder in your home directory. We will put all the forms there.

Please fill out the mid-course survey (and if you already have, thank you!).

Assignment to groups for the group project has been done (see Canvas), and every group has been given a private GitHub repository.

Classification problem

Can we use data we have seen in the past to predict something about the future?

For example, what is the diagnosis class of tumour cells with Concavity = 2 and Perimeter = 2?

K-nearest neighbours classification algorithm

To classify a new observation using a k-nearest neighbours classifier, we have to do the following steps:

Compute the distance between the new observation and each observation in our training set

Sort the data table in ascending order according to the distances.

Choose the top $K$ rows of the sorted table.

Classify the new observation based on a majority vote among the class labels of those $K$ rows.
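The four steps above can be sketched by hand in base R. This is only an illustration under stated assumptions: the first three rows of the hypothetical `train_data` table are the rows shown on the slides, the remaining three rows are made up so that a vote is possible, Euclidean distance is assumed as the distance measure, and $K = 3$ is an arbitrary choice.

```r
# Toy training set. Rows 1-3 come from the slide table; rows 4-6 are
# made-up values added purely for illustration.
train_data <- data.frame(
  Concavity = c(2.1, -0.1, -0.2, 1.8, 0.3, -0.5),
  Perimeter = c(2.3, 1.5, -0.2, 2.5, -0.4, -0.1),
  diagnosis = c("M", "M", "B", "M", "B", "B")
)

# New observation to classify (Concavity = 2, Perimeter = 2) and an
# arbitrary choice of K.
new_obs <- c(Concavity = 2, Perimeter = 2)
k <- 3

# Step 1: compute the (assumed Euclidean) distance from the new observation
# to every training observation.
train_data$distance <- sqrt(
  (train_data$Concavity - new_obs[["Concavity"]])^2 +
  (train_data$Perimeter - new_obs[["Perimeter"]])^2
)

# Step 2: sort the table in ascending order of distance.
sorted <- train_data[order(train_data$distance), ]

# Step 3: keep the top K rows.
nearest <- head(sorted, k)

# Step 4: majority vote over the class labels of the K nearest rows.
votes <- table(nearest$diagnosis)
predicted_class <- names(votes)[which.max(votes)]
print(predicted_class)
```

With an even $K$ or more than two classes, ties are possible; `which.max` simply returns the first of the tied labels, which is one of several reasonable tie-breaking rules.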

Classification problem

How is this problem represented as a data table in R?

Data table for example above

| $Y$ = diagnosis | $X_1$ = Concavity | $X_2$ = Perimeter |
|---|---|---|
| M | 2.1 | 2.3 |
| M | -0.1 | 1.5 |
| B | -0.2 | -0.2 |
| ... | ... | ... |

Where:

$Y$ is our class label/target/outcome/response variable

the $X$'s are our predictors/features/attributes/explanatory variables, and we have 2 of these

we have 569 observations (sets of measurements about tumour cells)
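As a rough sketch of how such a table might be held in R, the block below builds a `data.frame` from only the three rows visible above (the full data set has 569 rows, which are not reproduced here). The object names `cancer`, `Y`, and `X` are hypothetical and chosen for illustration.

```r
# Only the three rows shown on the slide; the remaining rows are omitted.
cancer <- data.frame(
  diagnosis = factor(c("M", "M", "B")),  # class label
  Concavity = c(2.1, -0.1, -0.2),        # predictor 1
  Perimeter = c(2.3, 1.5, -0.2)          # predictor 2
)

# The class label as a vector (a factor) and the predictors as a data.frame.
Y <- cancer$diagnosis
X <- cancer[, c("Concavity", "Perimeter")]

# Inspect the structure: one row per observation, one column per variable.
str(cancer)
```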

Data table for example above

| $Y$ = diagnosis | $X_1$ = Concavity | $X_2$ = Perimeter | $X_3$ = Symmetry |
|---|---|---|---|
| M | 2.1 | 2.3 | 2.7 |
| M | -0.1 | 1.5 | -0.2 |
| B | -0.2 | -0.2 | 0.12 |
| ... | ... | ... | ... |

Where:

$Y$ is our class label/target/outcome/response variable

the $X$'s are our predictors/features/attributes/explanatory variables, and we have 3 of these

we have 569 observations (sets of measurements about tumour cells)

Classification data table (general)

What does our general data table look like in the classification setting?

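| $Y$ | $X_1$ | $X_2$ | $X_3$ | ... | $X_p$ |
|---|---|---|---|---|---|
| $y_1$ | $x_{1,1}$ | $x_{1,2}$ | $x_{1,3}$ | ... | $x_{1,p}$ |
| $y_2$ | $x_{2,1}$ | $x_{2,2}$ | $x_{2,3}$ | ... | $x_{2,p}$ |
| ... | ... | ... | ... | ... | ... |
| $y_n$ | $x_{n,1}$ | $x_{n,2}$ | $x_{n,3}$ | ... | $x_{n,p}$ |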

Where:

$Y$ is our class label/target/outcome/response variable

the $X$'s are our predictors/features/attributes/explanatory variables, and we have $p$ of these

we have $n$ observations

Introduction to caret package in R

Steps to doing k-nn with caret in R:

Split your data table of training data into $Y$ (make this a vector) and $X$'s (make this a `data.frame`, not a `tibble`)

"Fit" your model to the data by:

choosing $k$ and creating a `data.frame` with one column (named `k`) and one value (your choice for $k$)

using `train` and feeding it $X$, $Y$, the method (`"knn"`), and $k$

Predict using your model by using `predict` and passing it your model object and the new observation (as a `data.frame`)

Code example:

Split your data table of training data into $Y$ and $X$'s

"Fit" your model to the data:

Predict using your model
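One way these three steps might look in code is sketched below. The original code cells are not reproduced here, so this is an assumption-laden stand-in: it uses R's built-in `iris` data with the sepal measurements as predictors, an arbitrary $k = 5$, and hypothetical object names (`X`, `Y`, `k`, `model_knn`, `new_obs`).

```r
library(caret)

# Step 1: split the training data into Y (a vector of class labels)
# and X (the predictors, as a plain data.frame rather than a tibble).
Y <- iris$Species
X <- data.frame(iris[, c("Sepal.Length", "Sepal.Width")])

# Step 2: "fit" the model. The value of k goes in a one-column,
# one-row data.frame whose column is named k, passed as tuneGrid.
k <- data.frame(k = 5)
set.seed(1234)  # caret resamples internally; the seed makes that repeatable
model_knn <- train(x = X, y = Y, method = "knn", tuneGrid = k)

# Step 3: predict the class of a new observation, supplied as a
# data.frame with the same column names as X.
new_obs <- data.frame(Sepal.Length = 5.0, Sepal.Width = 3.5)
prediction <- predict(object = model_knn, new_obs)
print(prediction)
```

The new observation's column names must match those of `X` exactly, since `predict` looks the predictors up by name.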

Unanswered questions at this point:

How do we choose `k`? (answer coming next week...)

Is our model any good?

"All models are wrong, but some are useful" -- George Box

... but we should try to say how useful (more coming next week...)

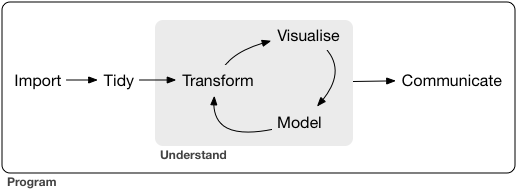

Go forth and ... model?

Class challenge

Suppose we have a new observation in the iris dataset, with petal length = 5 and petal width = 0.6. Using R and the caret package, how would you classify this observation based on its nearest neighbours, using petal length and petal width as the predictors?