Copyright Information

Lab 1: Intro to PyTorch and Music Generation with RNNs

In this lab, you'll get exposure to using PyTorch and learn how it can be used for deep learning. Go through the code and run each cell. Along the way, you'll encounter several TODO blocks -- follow the instructions to fill them out before running those cells and continuing.

Part 1: Intro to PyTorch

0.1 Install PyTorch

PyTorch is a popular deep learning library known for its flexibility and ease of use. Here we'll learn how computations are represented and how to define a simple neural network in PyTorch. For all the labs in Introduction to Deep Learning 2026, there will be a PyTorch version available.

Let's install PyTorch and a couple of dependencies.

1.1 What is PyTorch?

PyTorch is a machine learning library, like TensorFlow. At its core, PyTorch provides an interface for creating and manipulating tensors, which are data structures that you can think of as multi-dimensional arrays. Tensors are represented as n-dimensional arrays of base datatypes such as a float or integer -- they provide a way to generalize vectors and matrices to higher dimensions. PyTorch provides the ability to perform computation on these tensors, define neural networks, and train them efficiently.

The shape of a PyTorch tensor defines its number of dimensions and the size of each dimension. The ndim attribute (or, equivalently, the dim() method) of a PyTorch tensor gives the number of dimensions -- this is equivalent to the tensor's rank (the term used in TensorFlow), and you can also think of it as the tensor's order or degree.

Let’s start by creating some tensors and inspecting their properties:
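
As a minimal sketch, 0-d tensors (scalars) can be created from single Python numbers. The variable names and values here are illustrative assumptions, not part of the lab text.

```python
import torch

# 0-d tensors hold a single value and have no dimensions
integer = torch.tensor(1234)
decimal = torch.tensor(3.14159265359)

# ndim gives the number of dimensions; shape is empty for a scalar
print(f"`integer` is a {integer.ndim}-d tensor with shape {tuple(integer.shape)}")
print(f"`decimal` is a {decimal.ndim}-d tensor with dtype {decimal.dtype}")
```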

Vectors and lists can be used to create 1-d tensors:
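
One possible illustration follows; the names fibonacci and count_to_100 are hypothetical and chosen only for this sketch.

```python
import torch

# A 1-d tensor built from a Python list
fibonacci = torch.tensor([1, 1, 2, 3, 5, 8])

# A 1-d tensor holding the integers 0..99
count_to_100 = torch.arange(100)

print(f"`fibonacci` is a {fibonacci.ndim}-d tensor with shape {tuple(fibonacci.shape)}")
print(f"`count_to_100` is a {count_to_100.ndim}-d tensor with shape {tuple(count_to_100.shape)}")
```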

Next, let’s create 2-d (i.e., matrices) and higher-rank tensors. In image processing and computer vision, we will use 4-d Tensors with dimensions corresponding to batch size, number of color channels, image height, and image width.
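
A short sketch of both cases is below. The sizes (10 images, 3 channels, 256 x 256 pixels) are assumptions picked for illustration.

```python
import torch

# A 2-d tensor (matrix) with 2 rows and 3 columns
matrix = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])

# A 4-d tensor of zeros laid out as
# (batch size, color channels, image height, image width)
images = torch.zeros(10, 3, 256, 256)

print(f"matrix: ndim={matrix.ndim}, shape={tuple(matrix.shape)}")
print(f"images: ndim={images.ndim}, shape={tuple(images.shape)}")
```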

As you have seen, the shape of a tensor provides the number of elements in each tensor dimension. The shape is quite useful, and we'll use it often. You can also use slicing to access subtensors within a higher-rank tensor:
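
For instance, slicing a small matrix might look like the following sketch (the matrix values are made up for illustration):

```python
import torch

matrix = torch.tensor([[1, 2, 3], [4, 5, 6]])

row_vector = matrix[1]        # second row
column_vector = matrix[:, 1]  # second column
scalar = matrix[0, 1]         # element in row 0, column 1

print(f"row_vector: {row_vector}")
print(f"column_vector: {column_vector}")
print(f"scalar: {scalar}")
```

Indexing with a single integer drops that dimension, so a row of a 2-d tensor is a 1-d tensor and a single element is a 0-d tensor.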

1.2 Computations on Tensors

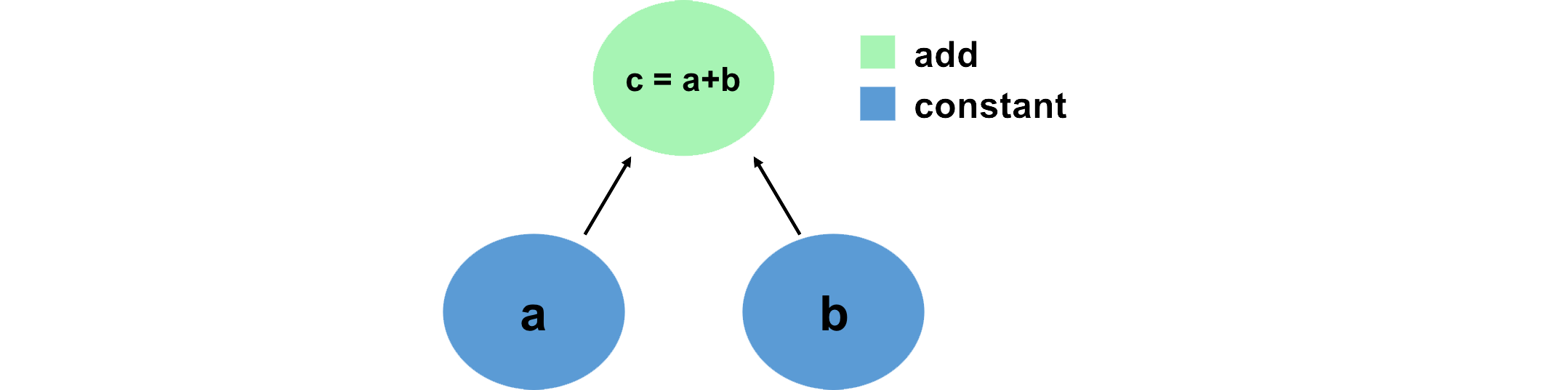

A convenient way to think about and visualize computations in a machine learning framework like PyTorch is in terms of graphs. We can define this graph in terms of tensors, which hold data, and the mathematical operations that act on these tensors in some order. Let's look at a simple example, and define this computation using PyTorch:
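
A minimal sketch of such a computation is below. It assumes the graph adds two constant nodes; the operand values 15 and 61 are an assumption chosen so that the sum is 76.

```python
import torch

# Two constant nodes (values assumed for illustration)
a = torch.tensor(15)
b = torch.tensor(61)

# Two equivalent ways to add them
c1 = torch.add(a, b)
c2 = a + b  # PyTorch overloads the "+" operator for tensors

print(f"c1: {c1}")
print(f"c2: {c2}")
```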

Notice how the output is a tensor with value 76 -- PyTorch has built a computation graph out of these operations, executed them, and given us back the result.

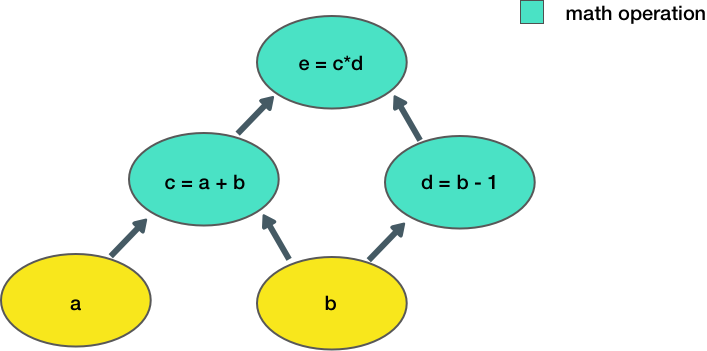

Now let's consider a slightly more complicated example:

Here, we take two inputs, a, b, and compute an output e. Each node in the graph represents an operation that takes some input, does some computation, and passes its output to another node.

Let's define a simple function in PyTorch to carry out this computation:

Now, we can call this function to execute the computation graph given some inputs a,b:
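
The sketch below shows one way this could look. Since the figure is not reproduced here, the intermediate nodes are an assumption: c = a + b, d = b - 1, and e = c * d. The input values are also illustrative.

```python
import torch

def func(a, b):
    # Assumed graph structure (not confirmed by the text):
    c = a + b   # c = a + b
    d = b - 1   # d = b - 1
    e = c * d   # e = c * d
    return e

# Example inputs (hypothetical values)
a = torch.tensor(1.5)
b = torch.tensor(2.5)

e_out = func(a, b)
print(f"e_out: {e_out}, shape: {tuple(e_out.shape)}")
```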

Notice how our output is a tensor with value defined by the output of the computation, and that the output has an empty shape (it is a 0-d tensor), as it is a single scalar value.

1.3 Neural networks in PyTorch

We can also define neural networks in PyTorch. PyTorch uses torch.nn.Module, which serves as a base class for all neural network modules in PyTorch and thus provides a framework for building and training neural networks.

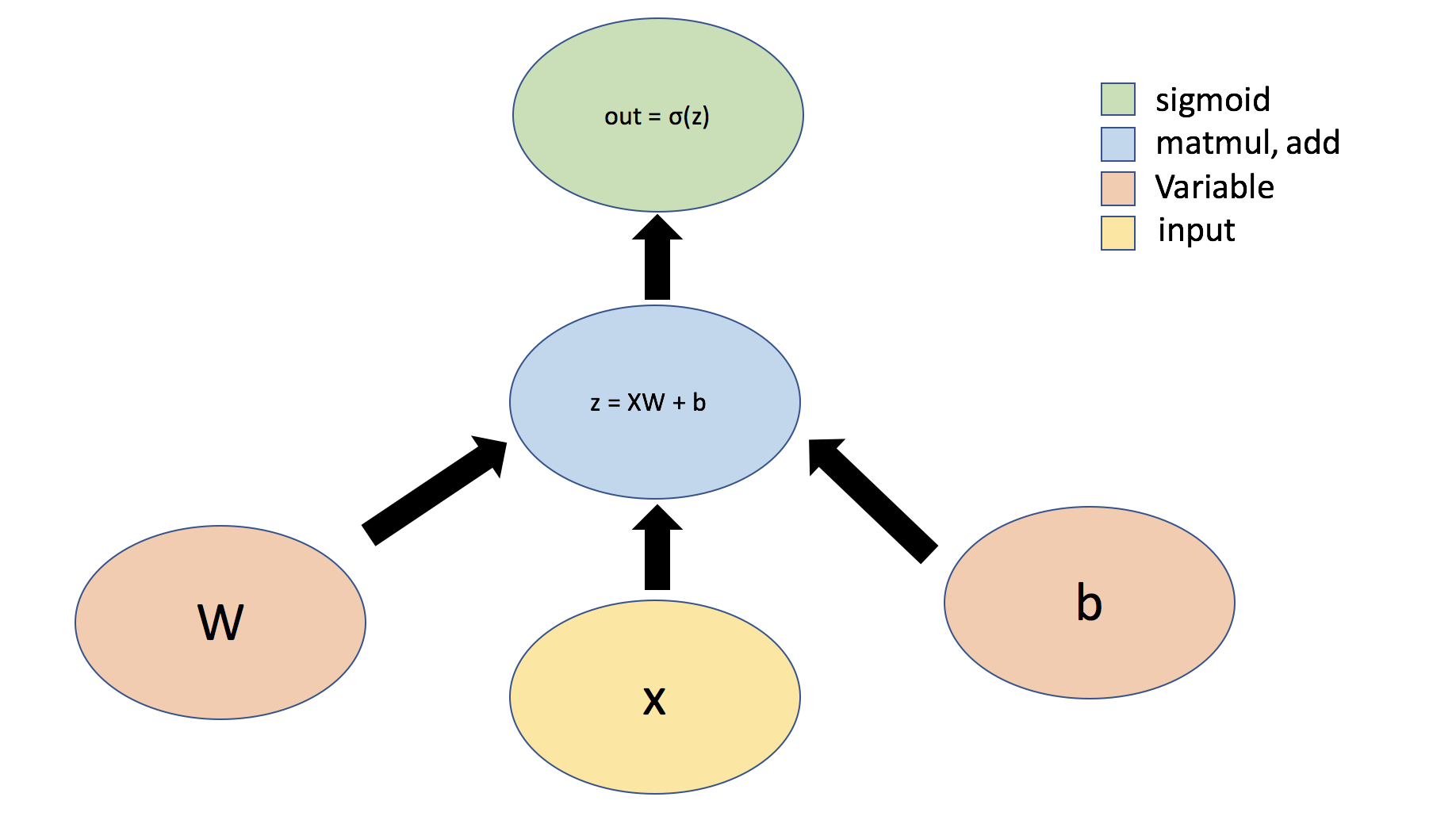

Let's consider the example of a simple perceptron defined by just one dense (aka fully-connected or linear) layer: $y = \sigma(Wx + b)$, where $W$ represents a matrix of weights, $b$ is a bias, $x$ is the input, $\sigma$ is the sigmoid activation function, and $y$ is the output.

We will use torch.nn.Module to define layers -- the building blocks of neural networks. Layers implement common neural network operations. In PyTorch, when we implement a layer, we subclass nn.Module and define the parameters of the layer as attributes of our new class. We also override the forward function, which defines the forward pass computation that is performed every time the layer is called. All classes subclassing nn.Module should override the forward function.

Let's write a dense layer class to implement a perceptron defined above.

Now, let's test the output of our layer.
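
One possible implementation and test is sketched below; it is not the lab's reference solution. It assumes inputs are batches of row vectors, so the layer computes sigmoid(xW + b), which is the batched form of a sigmoid applied to Wx + b. The parameter names W and bias and the random initialization are assumptions.

```python
import torch
import torch.nn as nn

class OurDenseLayer(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super().__init__()
        # nn.Parameter registers these tensors as trainable parameters
        self.W = nn.Parameter(torch.randn(num_inputs, num_outputs))
        self.bias = nn.Parameter(torch.randn(num_outputs))

    def forward(self, x):
        # x has shape (batch, num_inputs)
        z = torch.matmul(x, self.W) + self.bias
        y = torch.sigmoid(z)
        return y

torch.manual_seed(0)  # make the random initialization repeatable
layer = OurDenseLayer(num_inputs=2, num_outputs=3)
x_input = torch.tensor([[1.0, 2.0]])
y = layer(x_input)

print(f"input shape: {tuple(x_input.shape)}")
print(f"output shape: {tuple(y.shape)}")
```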

Conveniently, PyTorch provides a number of built-in nn.Modules (or layers) that are commonly used in neural networks, for example the nn.Linear and nn.Sigmoid modules.

Now, instead of using a single Module to define our simple neural network, we'll use the nn.Sequential module from PyTorch and a single nn.Linear layer to define our network. With the Sequential API, you can readily create neural networks by stacking together layers like building blocks.

We've defined our model using the Sequential API. Now, we can test it out using an example input:
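
As a hedged sketch, a Sequential model with one linear layer followed by a sigmoid activation could be built and tested like this. The sizes n_input_nodes=2 and n_output_nodes=3 and the example input are illustrative choices.

```python
import torch
import torch.nn as nn

n_input_nodes = 2
n_output_nodes = 3

# Stack a linear layer and a sigmoid activation
model = nn.Sequential(
    nn.Linear(n_input_nodes, n_output_nodes),
    nn.Sigmoid(),
)

x_input = torch.tensor([[1.0, 2.0]])
model_output = model(x_input)

print(f"input shape: {tuple(x_input.shape)}")
print(f"output shape: {tuple(model_output.shape)}")
```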

With PyTorch, we can create more flexible models by subclassing nn.Module. The nn.Module class allows us to group layers together flexibly to define new architectures.

As we saw earlier with OurDenseLayer, we can subclass nn.Module to create a class for our model, and then define the forward pass through the network using the forward function. Subclassing affords the flexibility to define custom layers, custom training loops, custom activation functions, and custom models. Let's define the same neural network model as above (i.e., Linear layer with an activation function after it), now using subclassing and using PyTorch's built in linear layer from nn.Linear.

Let's test out our new model, using an example input, setting n_input_nodes=2 and n_output_nodes=3 as before.
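
A minimal sketch of the subclassed model follows. The class name LinearWithSigmoidActivation and the attribute names are hypothetical, and this is only one way to fill in the exercise.

```python
import torch
import torch.nn as nn

class LinearWithSigmoidActivation(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super().__init__()
        # Built-in linear layer followed by a sigmoid activation
        self.linear = nn.Linear(num_inputs, num_outputs)
        self.activation = nn.Sigmoid()

    def forward(self, inputs):
        linear_output = self.linear(inputs)
        return self.activation(linear_output)

n_input_nodes = 2
n_output_nodes = 3
model = LinearWithSigmoidActivation(n_input_nodes, n_output_nodes)

x_input = torch.tensor([[1.0, 2.0]])
y = model(x_input)
print(f"output shape: {tuple(y.shape)}")
```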

Importantly, nn.Module affords us a lot of flexibility to define custom models. For example, we can use boolean arguments in the forward function to specify different network behaviors, such as different behaviors during training and inference. Let's suppose that in some instances we want our network to simply output the input, without any perturbation. We define a boolean argument isidentity to control this behavior:

Let's test this behavior:
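
One way this might be written is sketched below. The class name LinearButSometimesIdentity is hypothetical; only the isidentity argument comes from the text above.

```python
import torch
import torch.nn as nn

class LinearButSometimesIdentity(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super().__init__()
        self.linear = nn.Linear(num_inputs, num_outputs)

    def forward(self, inputs, isidentity=False):
        # When isidentity is True, return the input unchanged
        if isidentity:
            return inputs
        return self.linear(inputs)

model = LinearButSometimesIdentity(num_inputs=2, num_outputs=3)
x_input = torch.tensor([[1.0, 2.0]])

out_with_linear = model(x_input)
out_with_identity = model(x_input, isidentity=True)

print(f"linear output shape: {tuple(out_with_linear.shape)}")
print(f"identity output: {out_with_identity}")
```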

Now that we have learned how to define layers and models in PyTorch using both the Sequential API and subclassing nn.Module, we're ready to turn our attention to how to actually implement network training with backpropagation.

1.4 Automatic Differentiation in PyTorch

In PyTorch, torch.autograd is used for automatic differentiation, which is critical for training deep learning models with backpropagation.

Autograd records the operations performed on tensors so that gradients can be computed with the .backward() method. On a tensor, the requires_grad attribute controls whether autograd should record operations on that tensor. When a forward pass is made through the network, PyTorch builds a computational graph dynamically; then, to compute the gradient, the backward() method is called to perform backpropagation.

Let's compute the gradient of $y = x^2$:
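
A short sketch of a gradient computation with autograd is below. It assumes the function is y = x^2 and evaluates the derivative at the illustrative point x = 3, where dy/dx = 2x = 6.

```python
import torch

# requires_grad=True tells autograd to record operations on x
x = torch.tensor(3.0, requires_grad=True)

# Forward pass (assumed function: y = x^2)
y = x ** 2

# Backward pass: populates x.grad with dy/dx
y.backward()
dy_dx = x.grad

print(f"dy/dx at x = 3.0: {dy_dx}")
```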

In training neural networks, we use differentiation and stochastic gradient descent (SGD) to optimize a loss function. Now that we have a sense of how PyTorch's autograd can be used to compute and access derivatives, we will look at an example where we use automatic differentiation and SGD to find the minimum of $L = (x - x_f)^2$. Here $x_f$ is a variable for a desired value we are trying to optimize for; $L$ represents a loss that we are trying to minimize. While we can clearly solve this problem analytically ($x_{min} = x_f$), considering how we can compute this using PyTorch's autograd sets us up nicely for future labs where we use gradient descent to optimize entire neural network losses.
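
The loop below is a minimal sketch of this idea with a hand-written gradient descent update. It assumes the loss is L = (x - x_f)^2; the target x_f = 4, the learning rate, and the number of steps are hypothetical values chosen for illustration.

```python
import torch

torch.manual_seed(0)

x_f = 4.0             # target value (assumed)
learning_rate = 1e-2  # step size (assumed)
num_steps = 500       # number of updates (assumed)

# Start from a random initial guess and track gradients on x
x = torch.randn(1, requires_grad=True)
history = []

for _ in range(num_steps):
    loss = ((x - x_f) ** 2).sum()  # scalar loss
    loss.backward()                # compute dL/dx into x.grad

    # Update x without recording the update in the graph
    with torch.no_grad():
        x -= learning_rate * x.grad

    x.grad.zero_()                 # reset the gradient for the next step
    history.append(x.item())

print(f"final x: {x.item():.4f} (target {x_f})")
```

Gradients accumulate across calls to backward(), which is why the gradient is reset to zero after every update.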

Now, we have covered the fundamental concepts of PyTorch -- tensors, operations, neural networks, and automatic differentiation. Fire!!
