Path: blob/main/deep-learning-specialization/course-1-neural-networks-and-deep-learning/Week 3 Quiz - Shallow Neural Networks.md

70620 views

Week 3 Quiz - Shallow Neural Networks

1. Which of the following are true? (Check all that apply.)

is a matrix with rows equal to the parameter vector of the first layer.

is a matrix with rows equal to the transpose of the parameter vectors of the first layer

denotes the activation vector of the second layer for the third example.

is the column vector of parameters of the third layer and fourth neuron.

is the column vector of parameters of the fourth layer and third neuron.

📌 The vector is the column vector of parameters of the i-th layer and j-th neuron of that layer.denotes the activation vector of the second layer

📌 In our convention denotes the activation function of the j-th layer.denotes the activation vector of the second layer for the third example.

is the row vector of parameters of the fourth layer and third neuron.

2. The tanh activation is not always better than sigmoid activation function for hidden units because the mean of its output is closer to zero, and so it centers the data, making learning complex for the next layer. True/Flase?

True

Flase

3. Which of these is a correct vectorized implementation of forward propagation for layer l, where 1 ≤ l ≤ L?

📌 ,

4. You are building a binary classifier for recognizing cucumbers (y=1) vs. watermelons (y=0). Which one of these activation functions would you recommend using for the output layer?

sigmoid

ReLU

Leaky ReLU

tanh

5. Consider the following code:

What will be y.shape?

(3,1)

(3,)

(1,2)

(2,)

6. Suppose you have built a neural network. You decide to initialize the weights and biases to be zero. Which of the following statements is true?

Each neuron in the first hidden layer will perform the same computation in the first iteration. But after one iteration of gradient descent they will learn to compute different things because we have "broken symmetry"

Each neuron in the first hidden layer will perform the same computation. So even after multiple iterations of gradient descent each neuron in the layer will be computing the same thing as other neurons.

Each neuron in the first hidden layer will compute the same thing, but neurons in different layers will compute different things, thus we have accomplished “symmetry breaking” as described in lecture.

The first hidden layer’s neurons will perform different computations from each other even in the first iteration; their parameters will thus keep evolving in their own way.

7. Logistic regression's weights should be initialized randomly rather than to all zeros, because if you initialize to all zeros, then logistic regression will fail to learn a useful decision boundary because it will fail to "break symmetry", True/False?

True

False

8. Which of the following is true about the ReLU activation functions?

They cause several problems in practice because they have no derivative at 0. That is why Leaky ReLU was invented.

They are the go to option when you don't know what activation function to choose for hidden layers.

They are increasingly being replaced by the tanh in most cases.

They are only used in the case of regression problems, such as presicting house prices.

9. Consider the following 1 hidden layer neural network:

Which of the following statements are True? (Check all that apply).

will have shape (2, 1)

will have shape (4, 1)

will have shape (1, 2)

will have shape (2, 4)

will have shape (4, 2)

will have shape (2, 1)

10. Consider the following 1 hidden layer neural network:

**What are the dimensions of and ?

and are (2, m)

...

11. The use of the ReLU activation function is becoming more rare because the ReLU function has no derivative for c=0. True/False?

True

False

12. Suppose you have built a beural network with one hidden layer and tanh as activation function for the hidden layers. Which of the following is a best option to initialize the weights?

Initialize all weights to 0.

Initialize the weights to large random numbers.

Initialize all weights to a single number chosen randomly.

Initialize the weights to small random numbers.

13. A single output and single layer neural network that uses the sigmoid function as activation is equivalent to the logistic regression. True/False

False

True

📌 The logistic regression model can be expressed by . this is the same as .

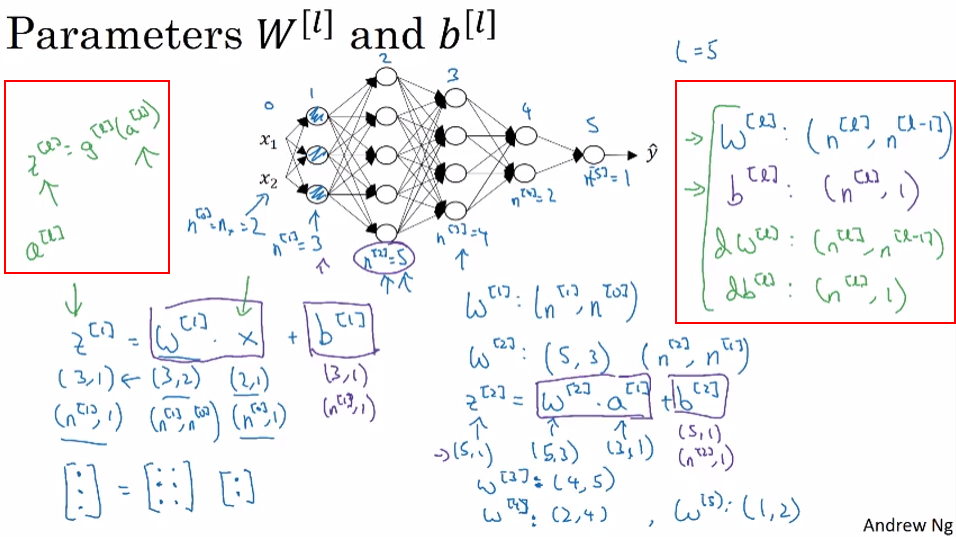

Prameters and