Path: blob/master/material/differential-equations.ipynb

1354 views

Differential Equations

Differential equations is without doubt one of the more useful branch of mathematics in science. They are used to model problems involving the change of some variable with respect to another. Differential equations cover a wide range of different applications, ranging from ordinary differential equations (ODE) until boundary-value problems involving many variables. For the sake of simplicity, throughout this section we shall cover only ODE systems as they are more elemental and equally useful. First, we shall cover first order methods, then second order methods and finally, system of differential equations.

See:

http://pages.cs.wisc.edu/~amos/412/lecture-notes/lecture21.pdf

http://pages.cs.wisc.edu/~amos/412/lecture-notes/lecture22.pdf

Infectious Disease Modelling:

Understanding the models that are used to model Coronavirus: Explaining the background and deriving the formulas of the SIR model from scratch. Coding and visualizing the model in Python.

Physical motivation

Momentum

In absence a forces a body moves freely to a constant velocity (See figure)

The quantity which can be associated with the change of speed of a body is the instantaneous momentum of a particle of mass moving with instantaneous velocity , given by and m/s is the speed of light.

If , or equivalent, sif :

Equation of motion

Any change of the momentum can be atribuited to some force, . In fact, the Newton's second law can be defined in a general way as

The change of the momentum of particle is equal to the net force acting upon the system times the duration of the interaction

For one instaneous duration , this law can be written as or as the equation of motion, which is the differential equation This equation of motion is of general validity and can be applied numerically to solve any problem.

Constant mass

In the special case of constant mass

Example: Drag force

For a body falling in the air, in addition to the gravitiy force , there is a dragging force in the opposite direction given by [see here for details] where

: is the frontal area on a plane perpendicular to the direction of motion—e.g. for objects with a simple shape, such as a sphere

is the density of the air

: Drag coefficient. for a baseball

: speed of the baseball

: momentum of baseball

The equation of motion is

We see then that, in general

Mathematical background

Ordinary differential equations normally implies the solution of an initial-value problem, i.e., the solution has to satisfy the differential equation together with some initial condition. Real-life problems usually imply very complicated problems and even non-soluble ones, making any analytical approximation unfeasible. Fortunately, there are two ways to handle this. First, for almost every situation, it is generally posible to simplify the original problem and obtain a simpler one that can be easily solved. Then, using perturbation theory, we can perturbate this solution in order to approximate the real one. This approach is useful, however, it depends very much on the specific problem and a systematic study is rather complicated.

The second approximation, and the one used here, consists of a complete numerical reduction of the problem, solving it exactly within the accuracy allowed by implicit errors of the methods. For this part, we are going to assume well-defined problems, where solutions are expected to be well-behaved.

Euler's method

First order differential equations

This method is the most basic of all methods, however, it is useful to understand basic concepts and definitions of differential equations problems.

Suppose we have a well-posed initial-value problem given by:

Now, let's define a mesh points as a set of values where we are going to approximate the solution . These points can be equal-spaced such that

Here, is called the step size of the mesh points.

Now, using the first-order approximation of the derivative that we saw in Numerical Differentiation, we obtain

or

The original problem can be rewritten as

where the notation has been introduced and the last term (error term) can be obtained taking a second-order approximation of the derivative. is the value that give the maximum in absolute value for

This equation can be solved recursively as we know the initial value .

Second order differential equations

The formalism of the Euler's method can be applied for any system of the form:

However, it is possible to extend this to second-order systems, i.e., systems involving second derivatives. Let's suppose a general system of the form:

For this system we have to provide both, the initial value and the initial derivative .

Now, let's define a new variable , the previous problem can be then written as

Each of this problem has now the form required by the Euler's algorithm, and the solution can be computed as:

Euler method for second order differential equations

We can define the column vectors such that

Newton's second law of motion

In fact, the equation of motion can be seen as the system of equations

Example:

An object of 0.5 Kg is launched from the top of a building of 50 m with an horizontal speed of 5 m/s. Study the evolution of the movement

Neglecting the air friction

Remember that in Python an string is also a list:

We can use the str attribute

Consider now the case the movement inside a fluid with a dragging force proportinal to the velocity (low velocity case) where can take the values 0 (not dragging force), 0.1 y 0.5

Apply a mask upon the DataFrame:

Example

Filter

c==0

Activity: Find the time to reach the position y=0 in each case. By using an algorithm to find roots

Activity: Repeat the previous analysis for a baseball in the air with a dragging force proportinal to the square of the velocity. The diameter of the baseball is 7.5cm

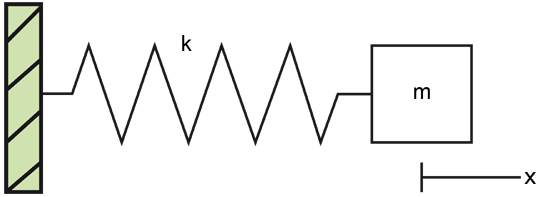

Example. Spring

In order to apply this, let's assume a simple mass-spring.

The equation of motion according to Newton's second law is

Using the previous results, we can rewrite this as:

And the equivalent Euler system is

Activity

1D

Implementation in Scipy

First order ordinary differential equations

integrate.odeint: Integrate a system of ordinary differential equations

Uso básico

Solves the initial value problem for stiff or non-stiff systems of first order ordinary differential equations:

where can be a vector. and

Example

Consider the following differential equation

where is constant and with initial condition

For a first evolution after one step of : with initial condition

while the evolution from 0 to 20 s is

Compare with the analytical solution

Since

Second order differential equations

Although first-order schemes like Euler's method are illustrative and allow a good understanding of the numerical problem, real applications cannot be dealt with them, instead more precise and accurate high-order methods must be invoked. In this section we shall cover a well-known family of numerical integrators, the Runge-Kutta methods.

Example: From http://sam-dolan.staff.shef.ac.uk/mas212/

As explained before, we need to write a second order ordinary differential equations in terms of first order matricial ordinary differential equation. In terms of a parameter, , this implay to have a column vector $$ U=\begin{bmatrix} y\\ z\\ \end{bmatrix} $$

Suppose we have a second-order ODE such as a damped simple harmonic motion equation, with parameter (instead ) We can turn this into two first-order equations by defining a new depedent variable. For example, So that where We can solve this system of ODEs using "odeint" with lists, as follows:

Let Therefore

Implementation by using only

x→0.1

The first column, Us[:,0] → y

Implementation by using explicitly and

Activity: Apply the previous example to the problem of parabolic motion with air friction:

where , and such that in two dimensions

Activity: Implemet directly: as

Example 2

In this example we are going to use the Scipy implementation for mapping the phase space of a pendulum.

The equations of the pendulum are given by:

where

TAREA: Generar un rango aleatorio uniforme de varios ordenes de magnitud. En particular, 1000 números aleatorios entre hasta

The nearly closed curves around (0,0) represent the regular small swings of the pendulum near its rest position. The oscillatory curves up and down of the closed curves represent the movement when the pendulum start at but with high enough angular speed such that the pendulum goes all the way around. Of course, its angular speed will slow down on the way up but then it will speed up on the way down again. In the absence of friction, it just keeps spinning around indefinitely. The counterclockwise motions of the pendulum of this kind are shown in the graph by the wavy lines at the top with positive angular speed, while the curves on the bottom which go from right to left represent clockwise rotations. The phase space have a periodicity of .

Small oscillation

All around

Activity: Check the anlytical expression for the period of a pendulum

Appendix

Activity

Fourth-order Runge-Kutta method

Finally, the most used general purpose method is the fourth-order Runge-Kutta scheme. Its derivation follows the same previous reasoning, however the procedure is rather long and it makes no sense to reprouce it here. Instead, we will give the direct algorithm:

Let's assume again a problem of the form:

The Runge-Kutta-4 (RK4) method allows us to predict the solution at the time as:

where:

Activity

with , and the solution shows periodic orbits.

Second-order Runge-Kutta methods

For this method, let's assume a problem of the form:

Now, we want to know the solution in the next timestep, i.e. . For this, we propose the next solution:

determining the coefficients and , we will have the complete approximated solution of the problem.

One way to determine them is by comparing with the taylor expansion around

Now, we can also expand the function around the point , yielding:

Replacing this into the original expression:

ordering the terms we obtain:

Equalling the next conditions are obtained:

This set of equations are undetermined so there are several solutions, each one yielding a different method:

ParseError: KaTeX parse error: Undefined control sequence: \matrix at position 1: \̲m̲a̲t̲r̲i̲x̲{ c_0 = 0 & c_…The algorithm is then:

with

All these methods constitute the second-order Runge-Kutta methods.

Two-Point Boundary Value Problems

Up to now we have solved initial value problems of the form:

Second order equations can be similarly planted as

This type of systems can be readily solved by defining the auxiliar variable , turning it into a first order system of equations.

Now, we shall solve two-point boundary problem, where we have two conditions on the solution instead of having the function and its derivative at some initial point, i.e.

In spite of its similar form to the initial value problem, two-point boundary problems pose a increased difficulty for numerical methods. The main reason of this is the iterative procedure performed by numerical approaches, where from an initial point, further points are found. Trying to fit two different values at different points implies then a continuous readjusting of the solution.

A common way to solve these problems is by turning them into a initial-value problem

Let's suppose some choice of , integrating by using some of the previous methods, we obtain the final boundary condition . If the produced value is not the one we wanted from our initial problem, we try another value . This can be repeated until we get a reasonable approach to . This method works fine, but it is so expensive and terribly inefficient.

Note when we change , the final boundary value also change, so we can assume . The solution to the problem can be thought then as a root-finding problem:

or

where is the residual function. This problem can be thus solved using some of the methods previously seen for the root-finding problem.

Example 3

A very simplified model of interior solid planets consists of a set of spherically symmetric radial layers, where the next properties are computed: density , enclosed mass , gravity and pressure . Each of these properties are assumed to be only radially dependent. The set of equations that rules the planetary interior is:

Hydrostatic equilibrium equation

Adams-Williamson equation

Continuity equation

Equation of state

For accurate results the term , called the adiabatic bulk modulus, is temperature and radii dependent. However, for the sake of simplicity we shall assume a constant value.

Solving simultaneously the previous set of equations, we can find the complete internal structure of a planet.

We have four functions to be determined and four equations, so the problem is solvable. It is only necessary to provide a set of boundary conditions of the form:

where is the planetary radius, the surface density, the mass of the planet, the surface gravity and the atmospheric pressure. However, there is a problem, we do not know the planetary radius , so an extra condition is required to determine this value. This is reached through the physical condition , this is, the enclosed mass at a radius (center of the planet) must be 0.

The two-value boundary nature of this problem lies then in fitting the mass function at and at . To do so, let's call the residual mass . This value should depend on the chosen radius , so a large value would imply a mass defect , and a small value a mass excess . The problem is then solving the radius for which . This can be done by using the bisection method.

For this problem, we are going to assume an one-layer planet made of perovskite, so . A planet mass equal to earth, so , a surface density and a atmospheric pressure of .

Implementation of the pendulum phase space

The phase space of a dynamical system is a space in which all the possible states of that system are univocally represented. For the case of the pendulum, a complete state of the system is given by the set , so its phase space is two-dimensional. In order to explore all the possible states, we are going to generate a set of initial conditions and integrate them.