Path: blob/master/material/numerical-calculus-integration-methods.ipynb

1307 views

Numerical approximation of integrals

The methods for numerical approximation that we will study here rely in the idea of approximate by using some polinomial , such that The integral of a polynomial can be calculated analytically because we know its antiderivative.

Since, as we seen in Lagrange-Polynomial, the next polynomial of th-degree where Given a well-behaved function , we have then

ParseError: KaTeX parse error: Expected & or \\ or \cr or \end at end of input: \begin{align} % trick notebook cell \int_a^b f(x)dx =& \int_a^b\sum_{k=0}^n f(x_k)L_{n,k}(x)dx \\ =&\sum_{k=0}^n f(x_k) \int_a^b L_{n,k}(x)dx \\ =&\sum_{k=0}^n f(x_k) \omega_k \, %ParseError: KaTeX parse error: Expected 'EOF', got '\end' at position 2: \̲e̲n̲d̲{align}where is a weight applied to each function value: Note that, since Lagrange polynomials do not depend on the function, we can calculate analically the weights using only the nodes ,

Error calculation

Including the error, the previous function can be written as

with the lagrange basis functions. Integrating over , we obtain the next expression:

It is worth mentioning this expression is a number, unlike differentiation where we obtained a function.

We can readily convert this expression in a weighted summation as

Finally, the quadrature formula or Newton-Cotes formula is given by the next expression:

where the estimated error is

Asumming besides intervals equally spaced such that , the error formula becomes:

if is even and

if is odd.

Below is a simple implementation of the quadrature formula or Newton-Cotes formula by using the implementation in scipy of the Interpolation polynomial in the Lagrangian form of order discussed in Interpolation from scipy interpolation

Trapezoidal rule

Using the previous formula, it is easily to derivate a set of low-order approximations for integration. Asumming a function and an interval , the associated quadrature formula is that obtained from a first-order Lagrange polynomial given by:

Using this, it is readible to obtain the integrate:

with and .

Simple implementation

Signature: integrate.trapz(y, x=None, dx=1.0, axis=-1)

Docstring:

Integrate along the given axis using the composite trapezoidal rule.

Integrate `y` (`x`) along given axis.

Parameters

----------

y : array_like

Input array to integrate.

x : array_like, optional

The sample points corresponding to the `y` values. If `x` is None,

the sample points are assumed to be evenly spaced `dx` apart. The

default is None.

dx : scalar, optional

The spacing between sample points when `x` is None. The default is 1.

axis : int, optional

The axis along which to integrate.

Returns

-------

trapz : float

Definite integral as approximated by trapezoidal rule.

See Also

--------

sum, cumsum

Notes

-----

Image [2]_ illustrates trapezoidal rule -- y-axis locations of points

will be taken from `y` array, by default x-axis distances between

points will be 1.0, alternatively they can be provided with `x` array

or with `dx` scalar. Return value will be equal to combined area under

the red lines.

References

----------

.. [1] Wikipedia page: http://en.wikipedia.org/wiki/Trapezoidal_rule

.. [2] Illustration image:

http://en.wikipedia.org/wiki/File:Composite_trapezoidal_rule_illustration.png

Examples

--------

>>> np.trapz([1,2,3])

4.0

>>> np.trapz([1,2,3], x=[4,6,8])

8.0

>>> np.trapz([1,2,3], dx=2)

8.0

>>> a = np.arange(6).reshape(2, 3)

>>> a

array([[0, 1, 2],

[3, 4, 5]])

>>> np.trapz(a, axis=0)

array([ 1.5, 2.5, 3.5])

>>> np.trapz(a, axis=1)

array([ 2., 8.])

File: /usr/local/lib/python3.5/dist-packages/numpy/lib/function_base.py

Type: function

In fact, as expected

For a better approximation we can use more intervals

Simpson's rule

A slightly better approximation to integration is the Simpson's rule. For this, assume a function and an interval , with a intermediate point . The associate second-order Lagrange polynomial is given by: See previous exercise

The final expression is then:

Scipy implementation

Improves with number of points:

Signature: integrate.simps(y, x=None, dx=1.0, axis=-1, even='avg')

Docstring:

`An alias of `simpson`.

`simps` is kept for backwards compatibility. For new code, prefer

`simpson` instead.

File: /usr/local/lib/python3.9/dist-packages/scipy/integrate/_quadrature.py

Type: function

Activity: Implement the Simpson method here or here by generalizing the previous Quadrature function to one that accepts lists of any size. Compares with the Scipy implementation for the next integral with a linspace of 100 points.

Hint: Note that the intervals for each 3 points can be obtained by slicing in steps of 2:

In this case the integration with Lagrange interpolation polynomials of degree 3 works much better:

Activity

Using the trapezoidal and the Simpson's rules, determine the value of the integral (4.24565)

Take the previous routine Quadrature and the above function and explore high-order quadratures. What happends when you increase the number of points?

N=20 def f(x): return 1+np.cos(x)**2+x

#Quadrature with N points (Simpson's rule) X = np.array(np.linspace(-0.5,1.5,N)) Ln=Quadrature( f, X, xmin=-1, xmax=2, ymin=0, ymax=4 ) integrate.simps(Ln(X),X) print(poly1d(Ln))

Activity

Approximate the following integrals using formulas Trapezoidal and Simpson rules. Are the accuracies of the approximations consistent with the error formulas?

Composite Numerical Integration

Although above-described methods are good enough when we want to integrate along small intervals, larger intervals would require more sampling points, where the resulting Lagrange interpolant will be a high-order polynomial. These interpolant polynomials exihibit usually an oscillatory behaviour (best known as Runge's phenomenon), being more inaccurate as we increase .

An elegant and computationally inexpensive solution to this problem is a piecewise approach, where low-order Newton-Cotes formula (like trapezoidal and Simpson's rules) are applied over subdivided intervals. This methods are already implemented in the previous scipy trapezoidal and Simpsons implementations. An internal implementation is given in the [Appendix of integration](./Appendix.ipynb#composite-numerical integration), the Composite Trapezoidal rule given by:

and the Composite Simpson's rule given by

Composite trapezoidal rule

This formula is obtained when we subdivide the integration interval within sets of two points, such that we can apply the previous Trapezoidal rule to each one.

Let be a well behaved function (), defining the interval space as , where N is the number of intervals we take, the Composite Trapezoidal rule is given by:

for some value in .

Activity

Determine the value of the integral (4.24565)

Activity

An experiment has measured , the number of particles entering a counter, per unit time, as a function of time. Your problem is to integrate this spectrum to obtain the number of particles that entered the counter in the first second

For the problem it is assumed exponential decay so that there actually is an analytic answer.

Compare the relative error for the composite trapezoid and Simpson rules. Try different values of N. Make a logarithmic plot of N vs Error.

Adaptive Quadrature Methods

Calculating the integrate of the function within the interval , we obtain:

Using composite numerical integration is not completely adequate for this problem as the function exhibits different behaviours for differente intervals. For the interval the function varies noticeably, requiring a rather small integration interval . However, for the interval variations are not considerable and low-order composite integration is enough. This lays a pathological situation where simple composite methods are not efficient. In order to remedy this, we introduce an adaptive quadrature methods, where the integration step can vary according to the interval. The main advantage of this is a controlable precision of the result.

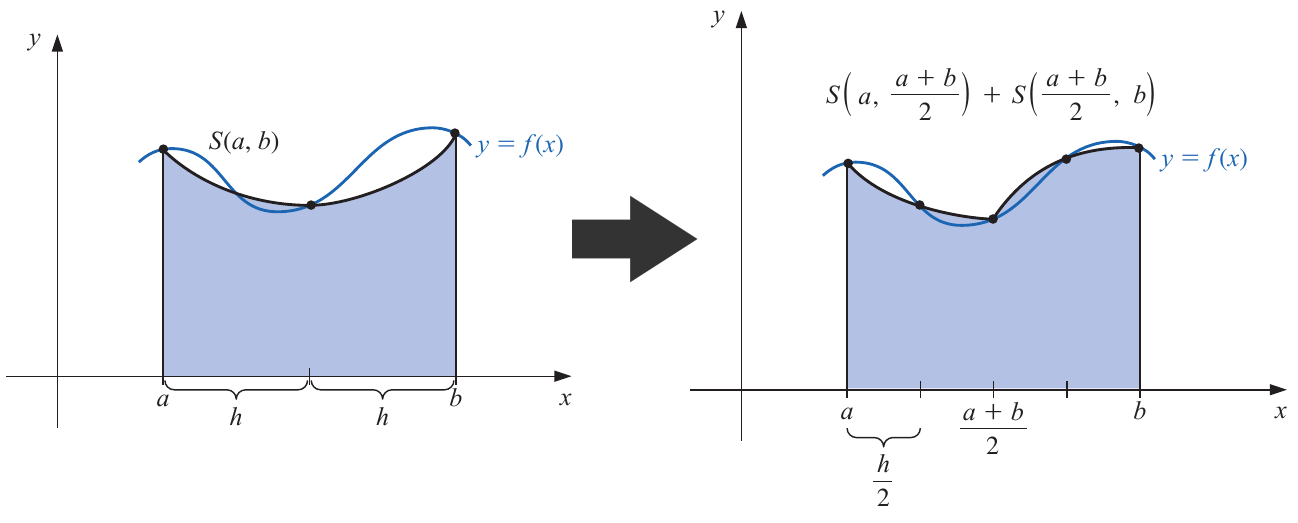

Simpson's adaptive quadrature

Although adaptive quadrature can be readily applied to any quadrature method, we illustrate the method with the Simpson's adaptive quadrature.

Let's assume a function . We want to compute the integral within the interval . Using a simple Simpson's quadrature, we obtain:

where we introduce the notation:

and is simply .

Now, instead of using an unique Simpson's quadrature, we implement two, by adding a new point in the midle of the interval , , yielding:

For this expression, we reasonably assume an equal fourth-order derivative , where is the estimative for the first subtinterval (i.e. ), and for the second one (i.e. ).

As both expressions can approximate the real value of the integrate, we can equal them, obtaining:

which leads us to a simple way to estimate the error without knowing the fourth-order derivative, i.e.

If we fix a precision , such that the obtained error for the second iteration is smaller

it implies:

and

The second iteration is then times more precise than the first one.

Steps Simpson's adaptive quadrature

1. Give the function to be integrated, the inverval and set a desired precision .

2. Compute the next Simpsons's quadratures:

3. If

then the integration is ready and is given by:

within the given precision.

4. If the previous step is not fulfilled, repeat from step 2 using as new intervals and and a new precision . Repeating until step 3 is fulfilled for all the subintervals.