

Copyright 2020 The TensorFlow Authors.

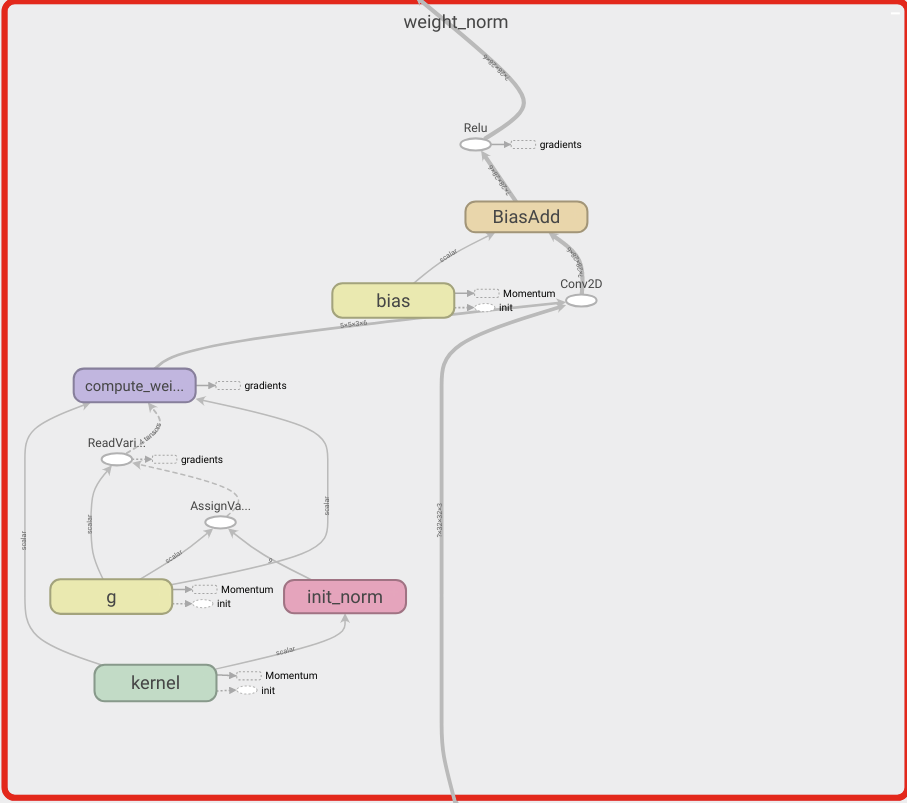

TensorFlow Addons Layers: WeightNormalization

Overview

This notebook demonstrates how to use the Weight Normalization layer and how it can improve convergence.

WeightNormalization

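Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks, Tim Salimans and Diederik P. Kingma (2016).

The paper reparameterizes each weight vector in a network so that its length is decoupled from its direction. From the abstract: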

By reparameterizing the weights in this way you improve the conditioning of the optimization problem and speed up convergence of stochastic gradient descent. Our reparameterization is inspired by batch normalization but does not introduce any dependencies between the examples in a minibatch. This means that our method can also be applied successfully to recurrent models such as LSTMs and to noise-sensitive applications such as deep reinforcement learning or generative models, for which batch normalization is less well suited. Although our method is much simpler, it still provides much of the speed-up of full batch normalization. In addition, the computational overhead of our method is lower, permitting more optimization steps to be taken in the same amount of time.
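In the paper, each weight vector is written as w = g * v / ||v||, where v is a free direction vector and g is a scalar that sets the length of w. The pure-Python snippet below is a minimal sketch of that idea for a single output unit, not the TensorFlow Addons implementation. The helpers `weight_norm`, `wn_unit` and `batch_norm` are hypothetical names chosen for illustration, and `batch_norm` is a simplified training-mode batch normalization without learned scale or shift. It checks two claims from the abstract: the length of w is controlled by g alone, and a weight-normalized unit's output for one example does not depend on the other examples in the minibatch, unlike batch normalization.

```python
import math

def weight_norm(v, g):
    """Hypothetical helper: reparameterize a weight vector as w = g * v / ||v||."""
    norm = math.sqrt(sum(x * x for x in v))
    return [g * x / norm for x in v]

def wn_unit(x, v, g, b=0.0):
    """Hypothetical helper: pre-activation w . x + b of one unit with weight-normalized w."""
    w = weight_norm(v, g)
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def batch_norm(values, eps=1e-5):
    """Simplified batch norm (assumption): normalize with the mean/variance of the whole batch."""
    mean = sum(values) / len(values)
    var = sum((u - mean) ** 2 for u in values) / len(values)
    return [(u - mean) / math.sqrt(var + eps) for u in values]

v, g = [3.0, 4.0], 2.0  # direction parameter v (||v|| = 5) and length parameter g
w = weight_norm(v, g)

# The length of w is |g| whatever the scale of v: length and direction are decoupled.
print(math.sqrt(sum(x * x for x in w)))   # close to 2.0
print(weight_norm([30.0, 40.0], g) == w)  # rescaling v leaves w unchanged

# Weight norm: the output for example x0 is the same whichever batch it appears in.
x0 = [1.0, 0.0]
batch_a = [x0, [0.0, 1.0]]
batch_b = [x0, [5.0, 5.0], [-2.0, 1.0]]
out_a = [wn_unit(x, v, g) for x in batch_a]
out_b = [wn_unit(x, v, g) for x in batch_b]
print(out_a[0] == out_b[0])

# Batch norm (contrast): normalizing v . x with batch statistics makes x0's output batch-dependent.
bn_a = batch_norm([sum(vi * xi for vi, xi in zip(v, x)) for x in batch_a])
bn_b = batch_norm([sum(vi * xi for vi, xi in zip(v, x)) for x in batch_b])
print(bn_a[0], bn_b[0])  # two different values for the same example x0
```

Because g and v are the trainable parameters, gradient descent can adjust the scale of each weight vector directly through g instead of through all components of v, which is the source of the improved conditioning the abstract describes. TensorFlow Addons packages this reparameterization as a wrapper layer, `tfa.layers.WeightNormalization`, which is applied around an existing Keras layer.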
