

Copyright 2020 The TensorFlow Authors.

In [ ]:

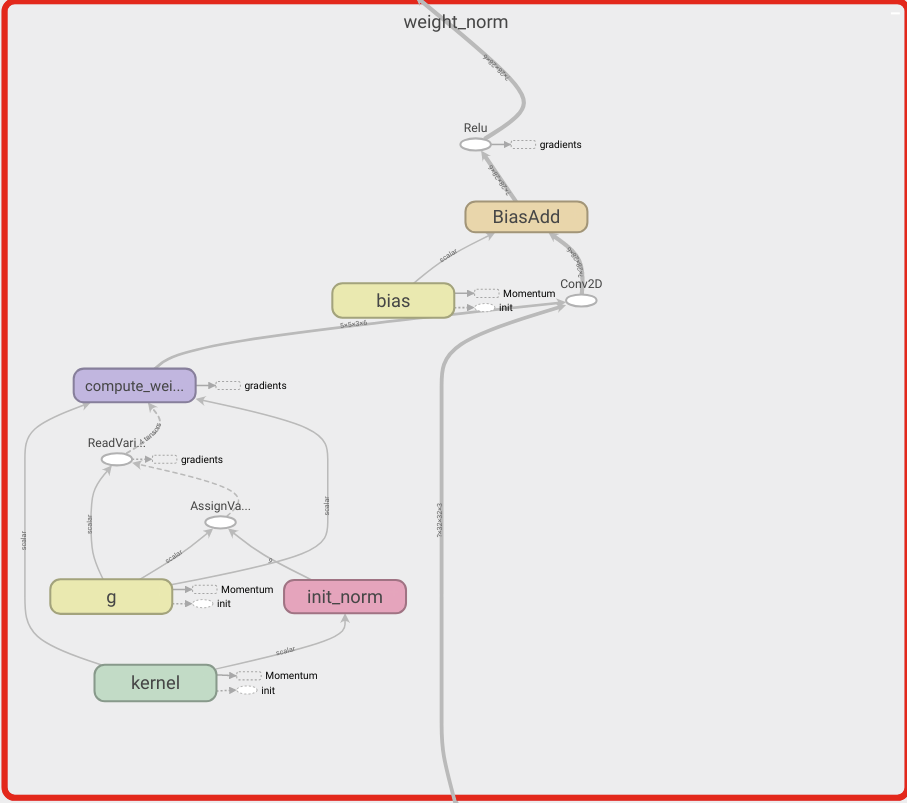

TensorFlow Addons Layers: WeightNormalization

Overview

This notebook demonstrates how to use the WeightNormalization layer and how it can improve convergence.

WeightNormalization

Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks

Tim Salimans, Diederik P. Kingma (2016)

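We present weight normalization: a reparameterization of the weight vectors in a neural network that decouples the length of those weight vectors from their direction. By reparameterizing the weights in this way, we improve the conditioning of the optimization problem and speed up convergence of stochastic gradient descent. Our reparameterization is inspired by batch normalization but does not introduce any dependencies between the examples in a minibatch. This means that our method can also be applied successfully to recurrent models such as LSTMs and to noise-sensitive applications such as deep reinforcement learning or generative models, for which batch normalization is less well suited. Although our method is much simpler, it still provides much of the speed-up of full batch normalization. In addition, the computational overhead of our method is lower, permitting more optimization steps to be taken in the same amount of time.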
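The paper writes each weight vector as w = g · v / ‖v‖, where the scalar g carries the length of w and the vector v carries only its direction. The NumPy sketch below illustrates that reparameterization and the minibatch point made in the abstract. It is a framework-free illustration, not the TensorFlow Addons implementation. The helpers `weight_norm` and `batch_norm` are hypothetical, and `batch_norm` is a bare batch normalization without learned scale or shift.

```python
import numpy as np


def weight_norm(v, g):
    """Hypothetical helper: w = g * v / ||v||, applied to each column of v.

    v has shape (in_features, out_features): one direction vector per output unit.
    g has shape (out_features,): one length per output unit.
    """
    return v * (g / np.linalg.norm(v, axis=0))


def batch_norm(z, eps=1e-5):
    """Hypothetical helper: standardize each unit with minibatch mean and variance."""
    return (z - z.mean(axis=0)) / np.sqrt(z.var(axis=0) + eps)


rng = np.random.default_rng(0)
v = rng.normal(size=(4, 3))      # direction parameters
g = np.array([0.5, 2.0, 3.0])    # length parameters
w = weight_norm(v, g)

# Each column of w has length g, however v is scaled.
print(np.linalg.norm(w, axis=0))
print(np.allclose(weight_norm(10.0 * v, g), w))

# Two minibatches that share only their first example.
x_a = rng.normal(size=(8, 4))
x_b = x_a.copy()
x_b[1:] = rng.normal(size=(7, 4))

# Weight normalization: example 0 gets the same pre-activations in both batches.
print(np.allclose((x_a @ w)[0], (x_b @ w)[0]))
# Batch normalization: example 0's output depends on the rest of its batch.
print(np.allclose(batch_norm(x_a @ w)[0], batch_norm(x_b @ w)[0]))
```

Because w is computed from the parameters alone, each example's output is the same no matter which other examples share its batch. The batch-normalized output shifts whenever the batch statistics change.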

Setup


Build models

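WeightNormalization is used as a wrapper around existing layers. The hypothetical NumPy class `WeightNormDense` below is a rough, framework-free sketch of what such a wrapper does. It keeps separate direction (`v`) and length (`g`) parameters and implements the data-dependent initialization described in the Salimans and Kingma paper. On one minibatch, it chooses g and the bias so that every unit's pre-activations have zero mean and unit variance. The class name, the shapes, and the 0.05 initial standard deviation for v are assumptions for illustration. TensorFlow Addons' own `WeightNormalization` wrapper controls this behavior through its `data_init` argument.

```python
import numpy as np


class WeightNormDense:
    """Hypothetical NumPy stand-in for a dense layer wrapped in weight normalization.

    Parameters: direction v (in_dim, out_dim), length g (out_dim,), bias b (out_dim,).
    The effective kernel is w = g * v / ||v||, computed per output unit (column).
    """

    def __init__(self, in_dim, out_dim, rng):
        # Assumed initialization: small zero-mean normal for the direction parameters.
        self.v = rng.normal(scale=0.05, size=(in_dim, out_dim))
        self.g = np.ones(out_dim)
        self.b = np.zeros(out_dim)

    def kernel(self):
        return self.v * (self.g / np.linalg.norm(self.v, axis=0))

    def __call__(self, x):
        # Pre-activations only; an activation function would be applied afterwards.
        return x @ self.kernel() + self.b

    def data_init(self, x):
        """Data-dependent initialization on one minibatch x of shape (batch, in_dim).

        With unit-length directions, t = x . v / ||v||. Setting g = 1 / std(t) and
        b = -mean(t) / std(t) standardizes each unit's pre-activations on this batch.
        """
        t = x @ (self.v / np.linalg.norm(self.v, axis=0))
        mu, sigma = t.mean(axis=0), t.std(axis=0)
        self.g = 1.0 / sigma
        self.b = -mu / sigma


rng = np.random.default_rng(0)
layer = WeightNormDense(in_dim=16, out_dim=8, rng=rng)
x_init = rng.normal(loc=3.0, scale=5.0, size=(256, 16))  # deliberately off-center data
layer.data_init(x_init)

z = layer(x_init)
print(z.mean(axis=0).round(6))  # per-unit means after initialization
print(z.std(axis=0).round(6))   # per-unit standard deviations after initialization
```

After `data_init`, z = (t - mean(t)) / std(t) for each unit. This initialization applies only to the batch it was computed on. Training then updates g, v and b freely.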

Load data


Train models

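During training, the optimizer updates g and v rather than w. The minimal NumPy sketch below assumes a single linear unit trained with mean squared error on synthetic data; both choices are hypothetical. It maps the gradient with respect to w back to g and v using the paper's formulas, ∇g L = ∇w L · v / ‖v‖ and ∇v L = (g / ‖v‖) ∇w L − (g ∇g L / ‖v‖²) v. It then checks these gradients against finite differences and runs plain gradient descent on (g, v). The sketch shows the mechanics only and makes no claim about convergence speed on this toy problem.

```python
import numpy as np


def wn_grads(v, g, grad_w):
    """Map dL/dw back to (dL/dg, dL/dv) for w = g * v / ||v|| (paper's formulas)."""
    norm_v = np.linalg.norm(v)
    grad_g = grad_w @ v / norm_v
    grad_v = (g / norm_v) * grad_w - (g * grad_g / norm_v**2) * v
    return grad_g, grad_v


def mse_and_grad_w(w, x, y):
    """Mean squared error of one linear unit, and its gradient with respect to w."""
    residual = x @ w - y
    return np.mean(residual**2), 2.0 * x.T @ residual / len(y)


def loss_of(v, g, x, y):
    """Loss expressed directly in terms of the (g, v) parameters."""
    return mse_and_grad_w(g * v / np.linalg.norm(v), x, y)[0]


rng = np.random.default_rng(0)
x = rng.normal(size=(64, 5))
y = x @ rng.normal(size=5)        # hypothetical synthetic regression targets
v, g = rng.normal(size=5), 1.0    # initial direction and length parameters

# 1) Compare the analytic (g, v) gradients with central finite differences.
_, grad_w = mse_and_grad_w(g * v / np.linalg.norm(v), x, y)
grad_g, grad_v = wn_grads(v, g, grad_w)
eps = 1e-6
fd_g = (loss_of(v, g + eps, x, y) - loss_of(v, g - eps, x, y)) / (2 * eps)
fd_v = np.array([
    (loss_of(v + eps * e, g, x, y) - loss_of(v - eps * e, g, x, y)) / (2 * eps)
    for e in np.eye(len(v))
])
print("g gradient matches:", np.isclose(grad_g, fd_g))
print("v gradient matches:", np.allclose(grad_v, fd_v, atol=1e-6))

# 2) grad_v is orthogonal to v (up to rounding error).
print("v . grad_v =", grad_v @ v)

# 3) Plain gradient descent on copies of (g, v) instead of on w directly.
g_t, v_t = g, v.copy()
lr = 0.02
losses = []
for _ in range(500):
    loss, grad_w_t = mse_and_grad_w(g_t * v_t / np.linalg.norm(v_t), x, y)
    losses.append(loss)
    step_g, step_v = wn_grads(v_t, g_t, grad_w_t)
    g_t -= lr * step_g
    v_t = v_t - lr * step_v
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Because ∇v L is orthogonal to v, a small step on v changes the direction of w to first order, while g alone sets its length. This separation of length and direction is what the reparameterization is designed to provide.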
