5. Linear Regression

5.1 Introduction

For many physical systems, the effect you’re investigating has a simple dependence on a single cause. The simplest interesting dependence is a linear one, where the cause (described by $x$) and the effect (described by $y$) are related by

$$y = mx + b. \tag{5.1}$$

If you plot $y$ vs. $x$, the resulting graph is a straight line with slope $m$ and intercept $b$.

For example, suppose that you are traveling by car and watching the speedometer closely to stay at a constant speed. If you were to record the odometer reading as a function of the amount of time that you’ve been on the road, you would find that a graph of your results is a straight line. In this example, it would be helpful to rewrite the general linear equation (5.1) to fit the specific physical situation and give physical interpretations of all the symbols. We could write the equation as

$$s = vt + s_0,$$

where $s$ is the odometer reading at time $t$, $v$ is the speed, and $s_0$ is the initial odometer reading (at $t = 0$).

Suppose your odometer works correctly, but your speedometer isn’t working properly so that the number the needle is pointing to is not really the speed of the car. It’s working well enough that if you keep the needle pinned at 60 mph, your car is traveling at some constant speed, but you just can’t be confident that the constant speed is in fact 60 mph. You could determine your speed by recording the value that the odometer registers at several different times. If both your odometer and your clock were ideal measuring devices, able to register displacement and time without experimental uncertainty, a graph of the odometer values versus time would lie along a perfectly straight line. The slope of this line would be the true speed corresponding to your chosen constant speedometer reading.

Of course, your time and distance measurements will always include some experimental uncertainty. Therefore, your data points will not all lie exactly on the line. The purpose of this chapter is to describe a procedure for finding the slope and intercept of the straight line that “best” represents your data in the presence of the inevitable experimental uncertainty of your measurements. The process of determining such a “best fit” line is called linear regression. Note that fitting a line to a set of data is far better than calculating the speed from a single measurement of distance and time, because linear regression uses all of the measurements at once. As you’ll see in the next section, it also allows you to determine the uncertainty of the speed.

5.2 Theory

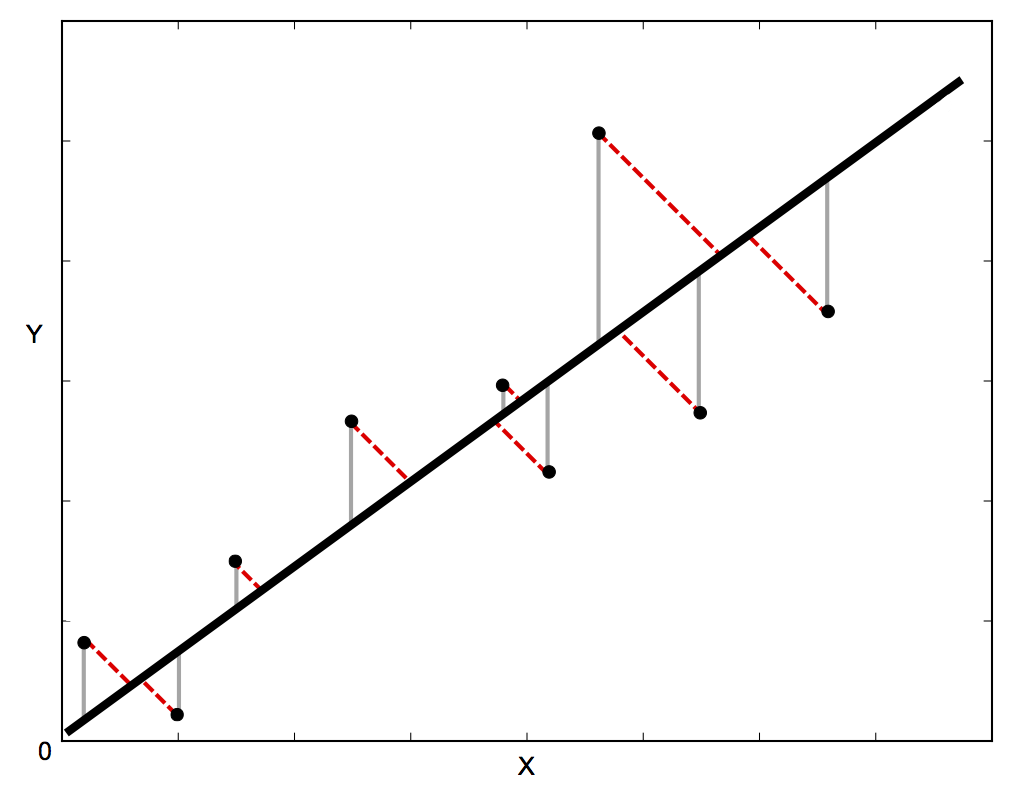

Suppose that you want to fit a set of $N$ data points $(x_i, y_i)$, where $i = 1, \ldots, N$, to a straight line, $y = mx + b$. This involves choosing the parameters $m$ and $b$ to minimize the sum of the squares of the differences between the data points and the linear function. The differences are usually defined in one of the two ways shown in figure 5.1. If there are uncertainties in only the $y$ direction, then the differences in the vertical direction (the gray lines in the figure) are used. If there are uncertainties in both the $x$ and $y$ directions, the orthogonal (perpendicular) distances from the line (the dotted red lines in the figure) are used.

Figure 5.1: The difference between the black line and the data points can be defined as shown by the gray lines or by the dotted red lines. (Image from http://blog.rtwilson.com/orthogonal-distance-regression-in-python/)

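For the case where there are uncertainties in only the $y$ direction, there is an analytical solution to the problem. If the uncertainty in $y_i$ is $\sigma_i$, then the difference squared for each point is weighted by $w_i = 1/\sigma_i^2$. If there are no uncertainties, each point is given an equal weight of one. The function to be minimized with respect to variations in the parameters, $m$ and $b$, is

$$\chi^2 = \sum_{i=1}^{N} w_i \left[ y_i - (m x_i + b) \right]^2. \tag{5.2}$$

The analytical solutions for the best-fit parameters that minimize $\chi^2$ (see pp. 181-189 of An Introduction to Error Analysis: The Study of Uncertainties in Physical Measurements by John R. Taylor, for example) are

$$m = \frac{\sum w_i \sum w_i x_i y_i - \sum w_i x_i \sum w_i y_i}{\Delta} \tag{5.3}$$

and

$$b = \frac{\sum w_i x_i^2 \sum w_i y_i - \sum w_i x_i \sum w_i x_i y_i}{\Delta}, \tag{5.4}$$

where $\Delta = \sum w_i \sum w_i x_i^2 - \left( \sum w_i x_i \right)^2$. The uncertainties in the parameters are

$$\sigma_m = \sqrt{\frac{\sum w_i}{\Delta}} \tag{5.5}$$

and

$$\sigma_b = \sqrt{\frac{\sum w_i x_i^2}{\Delta}}. \tag{5.6}$$

All of the sums in the four previous equations are over $i$ from 1 to $N$.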
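The closed-form weighted least-squares solution translates almost directly into code. The following is a minimal sketch, assuming the line is written as y = m*x + b and each point is weighted by one over its squared uncertainty. The function name weighted_linear_fit and the data are made up for illustration; this is not the course's linear_fit function.

```python
import numpy as np


def weighted_linear_fit(x, y, sigma_y=None):
    """Fit y = m*x + b by weighted least squares (uncertainties in y only).

    Hypothetical helper for illustration; it is not the course's linear_fit.
    Returns (m, b, sigma_m, sigma_b).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Weights w_i = 1 / sigma_i^2, or 1 for every point if no uncertainties are given.
    if sigma_y is None:
        w = np.ones_like(y)
    else:
        w = 1.0 / np.asarray(sigma_y, dtype=float) ** 2

    # Weighted sums that appear in the closed-form solution.
    S = np.sum(w)
    Sx = np.sum(w * x)
    Sy = np.sum(w * y)
    Sxx = np.sum(w * x * x)
    Sxy = np.sum(w * x * y)
    delta = S * Sxx - Sx ** 2

    m = (S * Sxy - Sx * Sy) / delta
    b = (Sxx * Sy - Sx * Sxy) / delta
    sigma_m = np.sqrt(S / delta)
    sigma_b = np.sqrt(Sxx / delta)
    return m, b, sigma_m, sigma_b


# Made-up data lying exactly on y = 2x + 1, each with an uncertainty of 0.5.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0
sigma_y = np.full_like(y, 0.5)

m, b, sigma_m, sigma_b = weighted_linear_fit(x, y, sigma_y)
print(m, b, sigma_m, sigma_b)
```

Because the made-up points lie exactly on a line, the fit should recover the slope and intercept used to generate them, which makes this a convenient sanity check before using real data.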

For the case where there are uncertainties in both $x$ and $y$, there is no analytical solution. The numerical method used instead is called orthogonal distance regression (ODR).

5.3 Implementation in Python

Calculating the parameters for the best-fit line and their uncertainties by hand using equations 5.3-5.6 would be tedious, so it is better to let a computer do the work. The linear_fit function that performs these calculations is defined in the file fitting.py, which must be located in the same directory as the Python program using it. If there are no uncertainties or only uncertainties in the $y$ direction, the analytical expressions above are used. If there are uncertainties in both the $x$ and $y$ directions, the scipy.odr module is used.

An example of performing a linear fit with uncertainties in the $y$ direction is shown below. The first command imports the function. Arrays containing the data points ($x$ and $y$) are sent to the function. If only one array of uncertainties is sent, the uncertainties are assumed to be in the $y$ direction. In the example, the array function (from the pylab library) is used to turn lists into arrays. It is also possible to read data from a file. The fitting function returns the best-fit parameters, their uncertainties, the reduced chi squared, and the degrees of freedom. The last two quantities are defined in the next section.
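The contents of fitting.py are not reproduced here, so the sketch below uses NumPy's polyfit as a stand-in for a fit with uncertainties in the $y$ direction only. It assumes the uncertainties are absolute, which is why the weights are 1/sigma and the covariance is requested unscaled. The data values and variable names are made up for illustration.

```python
import numpy as np

# Made-up measurements with an uncertainty of 0.2 on every y value.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.9, 5.1, 7.0, 9.2, 10.8])
sigma_y = np.full_like(y, 0.2)

# Degree-1 polynomial fit. polyfit expects weights of 1/sigma (not 1/sigma^2),
# and cov="unscaled" keeps the covariance matrix in terms of the given sigmas.
coeffs, cov = np.polyfit(x, y, 1, w=1.0 / sigma_y, cov="unscaled")

m, b = coeffs                         # slope and intercept
sigma_m, sigma_b = np.sqrt(np.diag(cov))  # their uncertainties

print(f"slope     = {m:.3f} +/- {sigma_m:.3f}")
print(f"intercept = {b:.3f} +/- {sigma_b:.3f}")
```

This does not return the reduced chi squared or the degrees of freedom; those would need to be computed separately from the residuals.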

Plotting data with error bars and a best-fit line together gives a rough idea of whether or not the fit is good. If the line passes through most of the error bars, the fit is probably reasonably good. The first line of code below makes a list of 100 points between the minimum and maximum values of $x$ in the data. The second line of code calculates the value of $y$ for the best-fit line in the figure.
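A minimal sketch of such a plot, using matplotlib's errorbar function, might look like the following. The data, the fit parameters, and the variable names (x_fit, y_fit, and so on) are made up for illustration and are not taken from the course's example.

```python
import numpy as np
import matplotlib.pyplot as plt

# Made-up data and fit parameters, for illustration only.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.9, 5.1, 7.0, 9.2, 10.8])
sigma_y = np.full_like(y, 0.2)
m, b = 1.99, 1.03  # slope and intercept from a fit to these points

# 100 evenly spaced x values spanning the data, and the line evaluated there.
x_fit = np.linspace(x.min(), x.max(), 100)
y_fit = m * x_fit + b

fig, ax = plt.subplots()
ax.errorbar(x, y, yerr=sigma_y, fmt="o", label="data")  # points with vertical error bars
ax.plot(x_fit, y_fit, label="best-fit line")
ax.set_xlabel("x")
ax.set_ylabel("y")
ax.legend()
# In a script, call plt.show() here to display the figure.
```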

An example of performing a linear fit with uncertainties in both the $x$ and $y$ directions is shown below. Arrays containing the data points ($x$ and $y$) and their uncertainties (in $y$ and in $x$, in that order) are sent to the function. Note the order of the uncertainties! The uncertainty in $x$ is optional, so it is second. This is also consistent with the errorbar function (see below).
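For readers curious about what an orthogonal distance regression looks like when scipy.odr is called directly, here is a minimal sketch. It is not the code inside fitting.py; the model function line, the starting guess beta0, and the data are all assumptions made for illustration.

```python
import numpy as np
from scipy import odr


def line(beta, x):
    """Straight line y = m*x + b, with beta = [m, b]."""
    m, b = beta
    return m * x + b


# Made-up data near y = 2x + 1 with uncertainties in both directions.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.1])
sigma_x = np.full_like(x, 0.1)
sigma_y = np.full_like(y, 0.2)

# RealData takes the standard deviations of x (sx) and y (sy).
data = odr.RealData(x, y, sx=sigma_x, sy=sigma_y)
model = odr.Model(line)

# ODR is iterative, so it needs a starting guess for [m, b].
fit = odr.ODR(data, model, beta0=[1.0, 0.0]).run()

m, b = fit.beta                 # best-fit slope and intercept
sigma_m, sigma_b = fit.sd_beta  # standard errors reported by the ODR routine
print(m, b, sigma_m, sigma_b)
```

Unlike the analytical case, the result can depend on the starting guess if it is very far from the true parameters, so a rough estimate of the slope and intercept from the plotted data is a reasonable choice for beta0.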

Again, you should plot the data with error bars and a best-fit line together to see whether or not the fit is good. When plotting the data with error bars in both directions, the array of uncertainties in the $y$ direction (the yerr argument) comes before the array of uncertainties in the $x$ direction (the xerr argument) in the errorbar function. In this case, points were not plotted for the data because they would hide the smallest error bars.

5.4 Interpreting the Results

It is possible to find the “best” line for your data, even when your data do not resemble a line at all. There is no substitute for actually looking at the graphed data to check that they look like a reasonably straight line. For General Physics III (PHYS 233), visually checking that the best-fit line fits the data reasonably well is sufficient. Ideally, the line would pass through all of the error bars. For Advanced Experimental Physics (PHYS 349), you should also look at the additional information returned by the fitting function.

The reduced chi squared and the degrees of freedom can also be used to judge the goodness of the fit. If $N$ is the number of data points and $c$ is the number of parameters (or constraints) in the fit, the number of degrees of freedom is

$$d = N - c.$$

For a linear fit, $c = 2$ because there are two parameters for a line. The reduced chi squared is defined as

$$\tilde{\chi}^2 = \frac{\chi^2}{d}.$$

According to Taylor (p. 271), “If we obtain a value of $\tilde{\chi}^2$ of order one or less, then we have no reason to doubt our expected distribution; if we obtain a value of $\tilde{\chi}^2$ much larger than one, our expected distribution is unlikely to be correct.”
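As a concrete illustration of these two definitions, the sketch below computes the degrees of freedom and the reduced chi squared for a straight-line fit. The function name reduced_chi_squared, the data, and the fit parameters are made up for illustration.

```python
import numpy as np


def reduced_chi_squared(x, y, sigma_y, m, b, n_params=2):
    """Return (reduced chi squared, degrees of freedom) for the line y = m*x + b."""
    x, y, sigma_y = (np.asarray(a, dtype=float) for a in (x, y, sigma_y))
    residuals = y - (m * x + b)
    # Chi squared: squared residuals, each measured in units of its uncertainty.
    chi2 = np.sum((residuals / sigma_y) ** 2)
    # Degrees of freedom: number of points minus number of fitted parameters.
    dof = len(x) - n_params
    return chi2 / dof, dof


# Made-up data with uncertainties of 0.2, and the line fitted to them.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.9, 5.1, 7.0, 9.2, 10.8]
sigma_y = [0.2] * 5
chi2_red, dof = reduced_chi_squared(x, y, sigma_y, m=1.99, b=1.03)
print(chi2_red, dof)
```

For these made-up numbers the reduced chi squared comes out somewhat below one, which by Taylor's criterion gives no reason to doubt the straight-line model.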

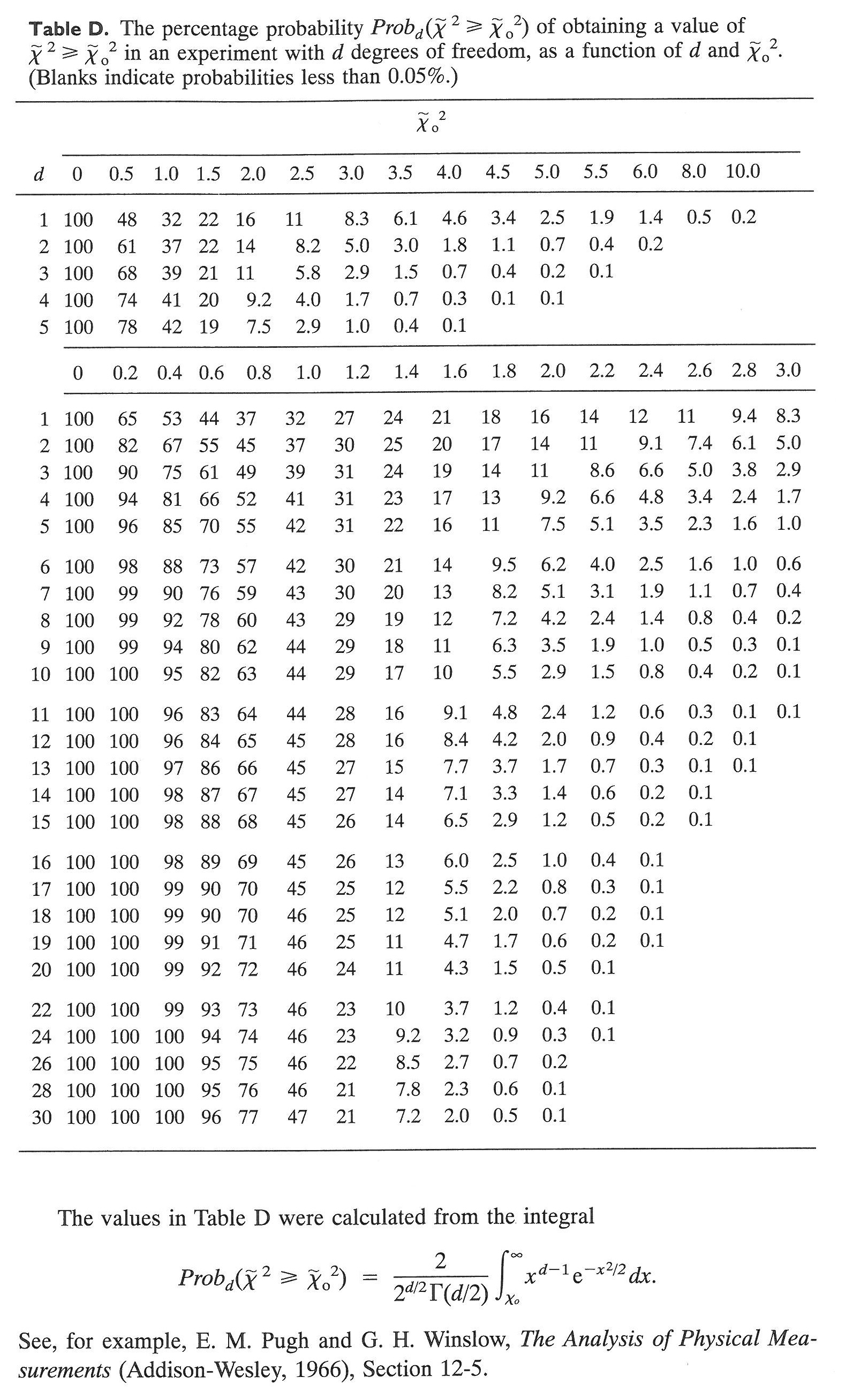

For an observed value (from fitting data) of the reduced chi squared ($\tilde{\chi}_o^2$), you can look up the probability of randomly getting a larger $\tilde{\chi}^2$ with $d$ degrees of freedom in the table from Appendix D of Taylor’s book. A typical standard is to reject a fit if

$$\mathrm{Prob}_d\left(\tilde{\chi}^2 \geq \tilde{\chi}_o^2\right) < 5\%.$$

In other words, if a reduced chi squared as large as the observed one is unlikely to occur randomly, then the fit is not a good one. In the first example above, five data points are fit with a line (two fit parameters), so there are $5 - 2 = 3$ degrees of freedom. Looking up the observed reduced chi squared from that fit in the table gives a probability large enough that there is no reason to reject the fit.
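Instead of reading the probability from a printed table, it can also be computed. The sketch below assumes SciPy is available and uses the survival function of the chi-squared distribution; the function name chi2_probability and the input value of 1.0 are made up for illustration and are not the result of the example fit.

```python
from scipy.stats import chi2


def chi2_probability(chi2_red_observed, dof):
    """Probability of a reduced chi squared at least this large arising by chance."""
    # chi2.sf is the survival function (1 - CDF) of the ordinary, unreduced
    # chi squared, so the reduced value is multiplied by the degrees of freedom.
    return chi2.sf(chi2_red_observed * dof, dof)


# Illustrative input: a reduced chi squared of 1.0 with 3 degrees of freedom.
prob = chi2_probability(1.0, 3)
print(f"Probability of a larger reduced chi squared: {100 * prob:.1f}%")
```

A probability well above the rejection threshold, as in this illustrative case, means the observed scatter about the line is consistent with the stated uncertainties.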