Curve Fitting

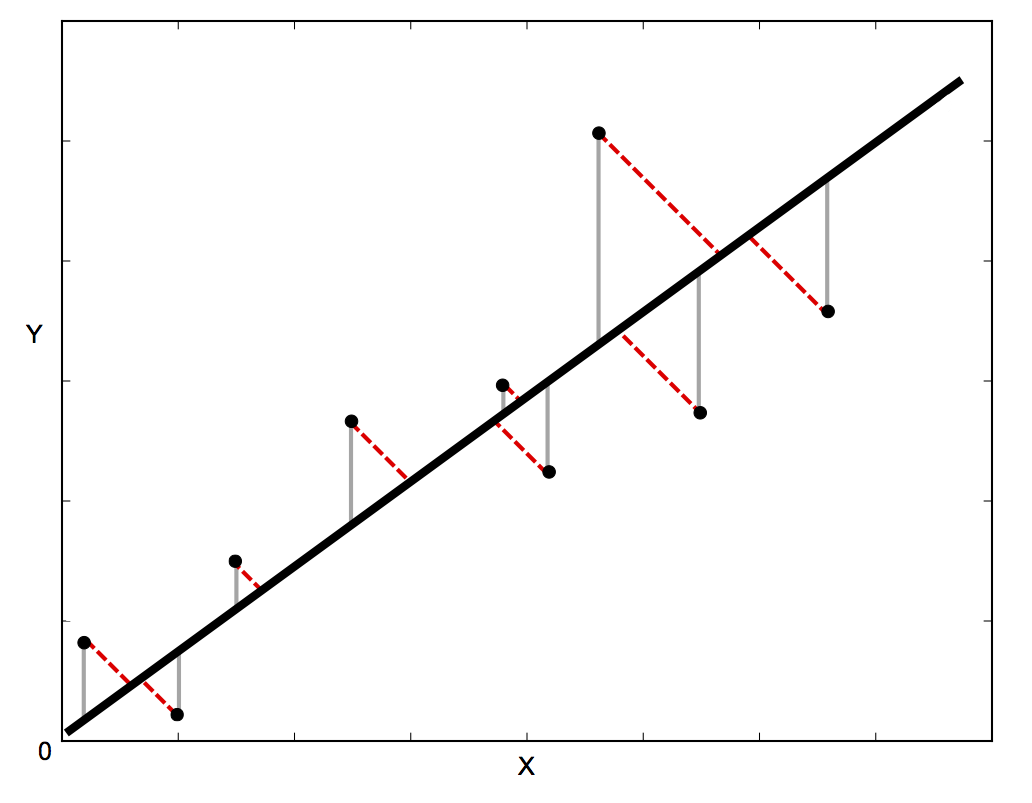

Suppose that you want to fit a set of data points $(x_i, y_i)$, where $i = 1, 2, \ldots, N$, to a function that can't be linearized. For example, the function could be a second-order polynomial, $f(x) = a x^2 + b x + c$, with parameters $a$, $b$, and $c$. In general, there isn't an analytical expression for the best-fit parameters like the one for linear regression with uncertainties in one dimension. The usual approach is to optimize the parameters to minimize the sum of the squares of the differences between the data and the function. How the differences are defined varies. If there are only uncertainties in the $y$ direction, the vertical differences (the gray lines in the figure above) are used. If there are uncertainties in both the $x$ and $y$ directions, the orthogonal (perpendicular) distances from the fitted curve (the dotted red lines in the figure above) are used.
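For a straight line $y = m x + b$, both kinds of distance have simple closed forms: the vertical distance is $|y - (m x + b)|$, and the perpendicular distance is that same value divided by $\sqrt{1 + m^2}$. The short sketch below compares the two for one made-up point. It assumes equal uncertainties in $x$ and $y$, so that the plain geometric perpendicular distance is the relevant one; for a general curve, the closest point on the curve has to be found numerically, which is part of what orthogonal distance regression does.

```python
import numpy as np


def vertical_distance(x, y, m, b):
    """Distance from each point (x, y) to the line y = m*x + b, measured along y."""
    return np.abs(y - (m * x + b))


def perpendicular_distance(x, y, m, b):
    """Shortest (orthogonal) distance from each point to the same line."""
    return np.abs(y - (m * x + b)) / np.sqrt(1.0 + m**2)


# Made-up point (1, 3) and the line y = x (m = 1, b = 0)
x = np.array([1.0])
y = np.array([3.0])
print(vertical_distance(x, y, 1.0, 0.0))       # [2.]
print(perpendicular_distance(x, y, 1.0, 0.0))  # [1.41421356]
```

The perpendicular distance is always less than or equal to the vertical one, and the two agree only for a horizontal line ($m = 0$).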

For the case where there are only uncertainties in the $y$ direction, if the uncertainty in $y_i$ is $\sigma_i$, then the difference squared for each point is weighted by $1/\sigma_i^2$. If there are no uncertainties, each point is given an equal weight of one, and the results should be used with caution. The function to be minimized with respect to variations in the parameters is

$$\chi^2 = \sum_{i=1}^{N} \frac{\left[y_i - f(x_i)\right]^2}{\sigma_i^2}.$$

For the case where there are uncertainties in both $x$ and $y$, the function to be minimized is more complicated.
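The weighted sum of squares, $\chi^2 = \sum_i [y_i - f(x_i)]^2 / \sigma_i^2$, translates directly into a few lines of NumPy. This is a sketch for checking a fit by hand, not part of fitting.py; chi_squared and quadratic are hypothetical names, and the data are made up. When no uncertainties are given, each point gets a weight of one.

```python
import numpy as np


def chi_squared(func, params, x, y, sigma=None):
    """Weighted sum of squared vertical residuals between the data and func.

    func has the form func(x, *params). If sigma is None, every point gets
    weight one (the unweighted case).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    residuals = y - func(x, *params)
    if sigma is None:
        return float(np.sum(residuals**2))
    sigma = np.asarray(sigma, dtype=float)
    return float(np.sum((residuals / sigma) ** 2))


def quadratic(x, a, b, c):
    return a * x**2 + b * x + c


# Made-up values for illustration only
x = [0.0, 1.0, 2.0]
y = [1.0, 2.5, 6.0]
sigma = [0.5, 0.5, 1.0]
print(chi_squared(quadratic, (1.0, 0.0, 1.0), x, y, sigma))  # 2.0
print(chi_squared(quadratic, (1.0, 0.0, 1.0), x, y))         # 1.25
```

With $a = 1$, $b = 0$, $c = 1$ the residuals are 0, 0.5, and 1, so the weighted sum is $(0.5/0.5)^2 + (1/1)^2 = 2.0$, while the unweighted sum is $0.25 + 1 = 1.25$.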

The general_fit function that performs this minimization is defined in the file "fitting.py", which must be in the same directory as your Python program. If there are only uncertainties in the $y$ direction, it uses the curve_fit function from the "scipy" library (scipy.optimize.curve_fit) to find the best-fit parameters. If there are uncertainties in both $x$ and $y$, it uses the odr package from the "scipy" library (scipy.odr), which performs orthogonal distance regression.
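The contents of fitting.py aren't shown in this tutorial. The following is one hypothetical way a function with the same call pattern could be written on top of scipy.optimize.curve_fit and scipy.odr. The name general_fit_sketch is made up, and choices such as treating the uncertainties as absolute (absolute_sigma=True for curve_fit, the unscaled cov_beta for ODR) are assumptions that the real general_fit may not share.

```python
import numpy as np
from scipy import odr
from scipy.optimize import curve_fit


def general_fit_sketch(f, x, y, p0, yerr=None, xerr=None):
    """Hypothetical stand-in for general_fit (NOT the actual code in fitting.py).

    f has the curve_fit form f(x, *params). Returns popt, punc, rchi2, dof.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dof = len(x) - len(p0)  # number of data points minus number of parameters

    if xerr is None:
        # Uncertainties only in y (or none): weighted least squares via curve_fit.
        popt, pcov = curve_fit(f, x, y, p0=p0, sigma=yerr,
                               absolute_sigma=yerr is not None)
        if yerr is None:
            weights = np.ones_like(y)  # equal weight of one for every point
        else:
            weights = 1.0 / np.asarray(yerr, dtype=float) ** 2
        rchi2 = np.sum(weights * (y - f(x, *popt)) ** 2) / dof
    else:
        # Uncertainties in both x and y: orthogonal distance regression.
        # scipy.odr expects the model as f(beta, x), so wrap the curve_fit-style f.
        model = odr.Model(lambda beta, xx: f(xx, *beta))
        data = odr.RealData(x, y, sx=xerr, sy=yerr)
        output = odr.ODR(data, model, beta0=p0).run()
        popt, pcov = output.beta, output.cov_beta  # cov_beta is not scaled by res_var
        rchi2 = output.res_var  # ODR's residual variance (weighted sum of squares / dof)

    punc = np.sqrt(np.diag(pcov))  # one-standard-deviation parameter uncertainties
    return popt, punc, rchi2, dof
```

Calling general_fit_sketch(fitfunc, x, y, p0, yerr) takes the curve_fit branch; adding xerr as a sixth argument switches to ODR, mirroring the argument order used by general_fit.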

An example of performing a fit with uncertainties in only the $y$ direction goes as follows. First, import the general_fit function. Second, define the function to be fit (fitfunc); it could have more than three parameters. Place initial guesses at the parameters in the list p0. Then send the name of the fitting function, the arrays containing the data points (x and y), the initial guesses at the parameters, and an array of uncertainties (yerr) to the general_fit function. If it succeeds, general_fit returns the optimal parameters and estimates of their uncertainties (called popt and punc), the reduced chi squared (rchi2), and the number of degrees of freedom (dof).
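Because fitting.py itself isn't reproduced here, the sketch below follows the same steps with scipy.optimize.curve_fit called directly. The data are synthetic (six points generated from $a = 1.5$, $b = -2.0$, $c = 3.0$ plus seeded Gaussian noise), so the printed numbers will not match the tutorial's own example. Passing absolute_sigma=True (treating yerr as absolute standard deviations) and computing rchi2 by hand are assumptions about what general_fit does, not documented behavior.

```python
import numpy as np
from scipy.optimize import curve_fit


# The function to fit: x comes first, then the parameters.
def fitfunc(x, a, b, c):
    return a * x**2 + b * x + c


# Made-up data for illustration: known parameters plus seeded noise.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 5.0, 6)
yerr = np.full_like(x, 0.5)
y = fitfunc(x, 1.5, -2.0, 3.0) + rng.normal(0.0, 0.5, size=x.size)

p0 = [1.0, 1.0, 1.0]  # initial guesses at a, b, c

# Assumption: uncertainties are absolute standard deviations.
popt, pcov = curve_fit(fitfunc, x, y, p0=p0, sigma=yerr, absolute_sigma=True)
punc = np.sqrt(np.diag(pcov))  # one-standard-deviation parameter uncertainties

dof = len(x) - len(popt)  # data points minus parameters
rchi2 = np.sum(((y - fitfunc(x, *popt)) / yerr) ** 2) / dof
print("popt:", popt)
print("punc:", punc)
print("rchi2:", rchi2, "dof:", dof)
```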

For this example, the optimal parameters are

and their uncertainties are

If the initial guesses at the parameters are not reasonable, the optimization may fail. It is often helpful to plot the data first to help make good guesses at the parameters.
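For some models, reading starting values off the data is easy to automate. The sketch below assumes a Gaussian peak (a hypothetical model used only for this illustration), takes the tallest point for the height and position and a quarter of the $x$ range for the width, and catches the RuntimeError that scipy.optimize.curve_fit raises when the minimization does not converge. The helper names guess_from_data and fit_or_none are made up for this example.

```python
import numpy as np
from scipy.optimize import curve_fit


def gaussian(x, amp, mu, width):
    """Hypothetical model used only for this illustration."""
    return amp * np.exp(-((x - mu) ** 2) / (2.0 * width**2))


def guess_from_data(x, y):
    """Rough starting values read off the data, much as you would from a plot."""
    i = int(np.argmax(y))
    amp = y[i]                          # height of the tallest point
    mu = x[i]                           # x position of the tallest point
    width = (x.max() - x.min()) / 4.0   # crude width: a quarter of the x range
    return [amp, mu, width]


def fit_or_none(f, x, y, p0, yerr):
    """Try the fit; return (popt, punc), or None if curve_fit gives up."""
    try:
        popt, pcov = curve_fit(f, x, y, p0=p0, sigma=yerr, absolute_sigma=True)
    except RuntimeError as err:
        # curve_fit raises RuntimeError when the least-squares minimization fails
        print("fit failed:", err)
        return None
    return popt, np.sqrt(np.diag(pcov))


# Made-up, noise-free data for illustration only
x = np.linspace(-5.0, 5.0, 21)
y = gaussian(x, 3.0, 1.0, 0.8)
yerr = np.full_like(x, 0.1)

p0 = guess_from_data(x, y)
result = fit_or_none(gaussian, x, y, p0, yerr)
print("initial guesses:", p0)
print("result:", result)
```

Because the width enters squared, a fit could in principle return a negative width with the same shape; taking its absolute value is harmless.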

If you're performing a fit with uncertainties in both the $x$ and $y$ directions, arrays containing the data points (x and y) and their uncertainties (yerr and xerr) are sent to the general_fit function as follows:

popt, punc, rchi2, dof = general_fit(fitfunc, x, y, p0, yerr, xerr)

Note the order of the uncertainties! The uncertainty in $x$ (xerr) is optional, so it comes after yerr. This order is also consistent with matplotlib's errorbar function, which takes yerr before xerr.
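To see what happens in the two-dimensional case, here is a hedged sketch that calls scipy.odr directly on made-up data. Note that scipy.odr wants the model in the form f(beta, x), so the curve_fit-style fitfunc(x, a, b, c) is wrapped in a lambda, and that RealData takes sx (the $x$ uncertainty) before sy, the opposite of the yerr, xerr order used by general_fit. Using the unscaled cov_beta for punc and res_var for the reduced chi squared are assumptions about how general_fit reports its results.

```python
import numpy as np
from scipy import odr


def fitfunc(x, a, b, c):
    return a * x**2 + b * x + c


# Made-up data with uncertainties in both x and y (illustration only)
rng = np.random.default_rng(1)
x_true = np.linspace(0.0, 5.0, 6)
xerr = np.full_like(x_true, 0.1)
yerr = np.full_like(x_true, 0.5)
x = x_true + rng.normal(0.0, 0.1, size=x_true.size)
y = fitfunc(x_true, 1.5, -2.0, 3.0) + rng.normal(0.0, 0.5, size=x_true.size)

# scipy.odr wants f(beta, x), so wrap the curve_fit-style function.
model = odr.Model(lambda beta, xx: fitfunc(xx, *beta))
data = odr.RealData(x, y, sx=xerr, sy=yerr)  # note: x uncertainty first, then y
result = odr.ODR(data, model, beta0=[1.0, 1.0, 1.0]).run()

popt = result.beta
punc = np.sqrt(np.diag(result.cov_beta))  # unscaled covariance: sx, sy taken as absolute
rchi2 = result.res_var                    # residual variance, playing the role of rchi2
dof = len(x) - len(popt)
print("popt:", popt)
print("punc:", punc)
print("rchi2:", rchi2, "dof:", dof)
```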

Interpreting the Results

Plotting data with error bars and a best-fit function together gives some idea of whether or not the fit is good. If the curve passes within most of the error bars, the fit is probably reasonably good. To draw the curve, first make an array of 100 points between the minimum and maximum values of $x$ in the data. Then evaluate the fitting function at those points, sending all of the parameters at once by putting an asterisk in front of the array name (*popt). This is Python's argument unpacking: each element of popt is passed as a separate argument.
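A minimal sketch of such a plot with matplotlib follows. The data and the parameter values in popt are made up to stand in for the results of a fit, and the names xx and yy are arbitrary. It selects the off-screen Agg backend and saves to a file so that it runs without a display.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")  # off-screen backend so the sketch runs without a display
import matplotlib.pyplot as plt


def fitfunc(x, a, b, c):
    return a * x**2 + b * x + c


# Made-up data and "best-fit" parameters standing in for the results of a fit
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.2, 2.3, 5.4, 10.1, 19.3, 30.2])
yerr = np.full_like(x, 0.5)
popt = np.array([1.5, -2.0, 3.0])

xx = np.linspace(min(x), max(x), 100)  # 100 evenly spaced points across the data
yy = fitfunc(xx, *popt)                # *popt unpacks the array into a, b, c

fig, ax = plt.subplots()
ax.errorbar(x, y, yerr=yerr, fmt="o", label="data")
ax.plot(xx, yy, label="best fit")
ax.set_xlabel("x")
ax.set_ylabel("y")
ax.legend()
fig.savefig("best_fit.png")  # in an interactive session, plt.show() displays it instead
```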

The reduced chi squared and the degrees of freedom can also be used to judge the goodness of the fit. If $N$ is the number of data points and $c$ is the number of parameters (or constraints) in the fit, the number of degrees of freedom is

$$d = N - c.$$

In the example, $c = 3$ because there are three parameters in the function. The reduced chi squared is defined as

$$\tilde{\chi}^2 = \frac{\chi^2}{d}.$$

According to Taylor (p. 271), “If we obtain a value of $\tilde{\chi}^2$ of order one or less, then we have no reason to doubt our expected distribution; if we obtain a value of $\tilde{\chi}^2$ much larger than one, our expected distribution is unlikely to be correct.” For an observed value of the reduced chi squared, $\tilde{\chi}_0^2$ (from fitting data), you can look up the probability $P_d(\tilde{\chi}^2 \ge \tilde{\chi}_0^2)$ of randomly getting a value at least that large with $d$ degrees of freedom in the table below (from Appendix D of Taylor’s book). A typical standard is to reject a fit if

$$P_d(\tilde{\chi}^2 \ge \tilde{\chi}_0^2) < 5\%.$$

In other words, if the reduced chi squared for a fit is unlikely to occur randomly, then the fit is not a good one. In the example above, six data points are fit, so $d = 6 - 3 = 3$; the table gives a probability for the observed $\tilde{\chi}_0^2$ that is above this threshold, so there is no reason to reject the fit.

From An Introduction to Error Analysis by John R. Taylor
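Instead of reading Taylor’s table, the same probability can be computed with scipy.stats.chi2. Since $d$ times the reduced chi squared follows a chi-squared distribution with $d$ degrees of freedom, the probability of getting a reduced chi squared at least as large as the observed one is the survival function chi2.sf(d * rchi2_obs, d). The helper name prob_larger_rchi2 is hypothetical, and the 5% cutoff is the common convention rather than a fixed rule; treat this as a sketch that should agree with the table to its printed precision.

```python
from scipy.stats import chi2


def prob_larger_rchi2(rchi2_obs, d):
    """P_d(reduced chi2 >= rchi2_obs): chance of a value at least this large.

    d * (reduced chi squared) follows a chi-squared distribution with d
    degrees of freedom, so the survival function gives the tail probability.
    """
    return chi2.sf(rchi2_obs * d, d)


N, c = 6, 3   # six data points, three fit parameters (as in the example)
d = N - c     # degrees of freedom
for rchi2_obs in (0.5, 1.0, 2.0, 4.0):
    p = prob_larger_rchi2(rchi2_obs, d)
    verdict = "reject" if p < 0.05 else "no reason to reject"  # 5% convention
    print(f"reduced chi2 = {rchi2_obs}: P = {100 * p:.1f}% -> {verdict}")
```

For $d = 2$ the survival function is exactly $e^{-\chi^2/2}$, which makes a convenient spot check: a reduced chi squared of 1 then gives $P = e^{-1} \approx 37\%$.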

Additional Documentation

More information is available at:

curve_fit: http://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.curve_fit.html

odr: https://docs.scipy.org/doc/scipy/reference/odr.html